The BoWI Simulator was designed to explore and compare algorithms for gesture and posture recognition based on IMU and radio measurements. The first version was released in June 2013 and has been improved since. The simulator has two goals. The first is to generate synthetic signals equivalent to the measurements that would be obtained in different scenarios. The second is to support the exploration and comparison of algorithms. The main advantage of this approach is that scenarios are exactly repeatable while any other parameter can be varied.

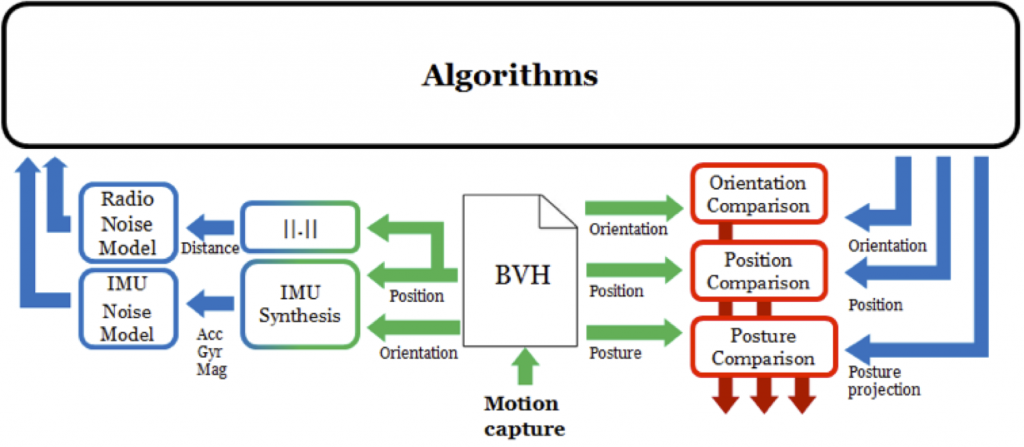

The simulator, whose organization is shown in Figure 1, takes two inputs. The first is a set of standard BVH files containing the motions of an avatar, which can be extracted from a motion capture system such as Moven. The second is the description of a set of nodes positioned on the body. From such a capture, the position and orientation of each joint of the avatar can be computed for every frame. Ideal IMU values can then be derived, and the true distance between any pair of points on the avatar is also directly available by computation. For each IMU axis, an error consistent with the noise models is then added to generate realistic measurements. Based on these IMU and radio models, noisy sensor data (attitude, speed, acceleration) are produced for every node, and RSSI (received radio signal power) values are computed for every pair of nodes.
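The measurement-generation step can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulator's code. Node positions are assumed to be already extracted per frame from the BVH hierarchy (parsing is omitted). Ideal acceleration is obtained by central finite differences in the world frame, with no rotation into the sensor frame and no gravity term. Each axis receives a hypothetical bias-plus-white-Gaussian-noise error. RSSI follows a log-distance path-loss model whose parameters (`p0`, `n`, `d0`, `sigma`) are illustrative values, not BoWI calibration data.

```python
import math
import random


def ideal_acceleration(positions, dt):
    """Central finite-difference acceleration (m/s^2) from per-frame 3D positions.

    positions: list of (x, y, z) tuples for one node, one per BVH frame
    (assumed already computed from the BVH joint hierarchy).
    Returns one world-frame acceleration vector per interior frame.
    """
    acc = []
    for k in range(1, len(positions) - 1):
        acc.append(tuple(
            (positions[k + 1][i] - 2 * positions[k][i] + positions[k - 1][i]) / dt ** 2
            for i in range(3)
        ))
    return acc


def noisy_imu_axis(ideal, bias, sigma, rng):
    """Hypothetical noise model: constant bias plus white Gaussian noise on one axis."""
    return [v + bias + rng.gauss(0.0, sigma) for v in ideal]


def rssi_dbm(p_a, p_b, p0=-40.0, n=2.5, d0=1.0, sigma=0.0, rng=None):
    """Log-distance path-loss RSSI with optional Gaussian shadowing (assumed parameters)."""
    d = max(math.dist(p_a, p_b), 1e-3)  # avoid log10(0) for coincident points
    shadow = rng.gauss(0.0, sigma) if (rng is not None and sigma > 0) else 0.0
    return p0 - 10.0 * n * math.log10(d / d0) + shadow


# Usage: a node moving along x with x = t^2 (constant 2 m/s^2 acceleration).
rng = random.Random(0)
dt = 0.01
traj = [((k * dt) ** 2, 0.0, 0.0) for k in range(5)]
acc = ideal_acceleration(traj, dt)
ax_noisy = noisy_imu_axis([a[0] for a in acc], bias=0.05, sigma=0.02, rng=rng)
print(rssi_dbm((0, 0, 0), (2, 0, 0)))  # about -47.5 dBm for a 2 m link
```

Because the ideal signals are computed from the same capture every time, rerunning with a different seed or noise level changes only the error terms, which is what makes scenario comparisons repeatable.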

Outcomes

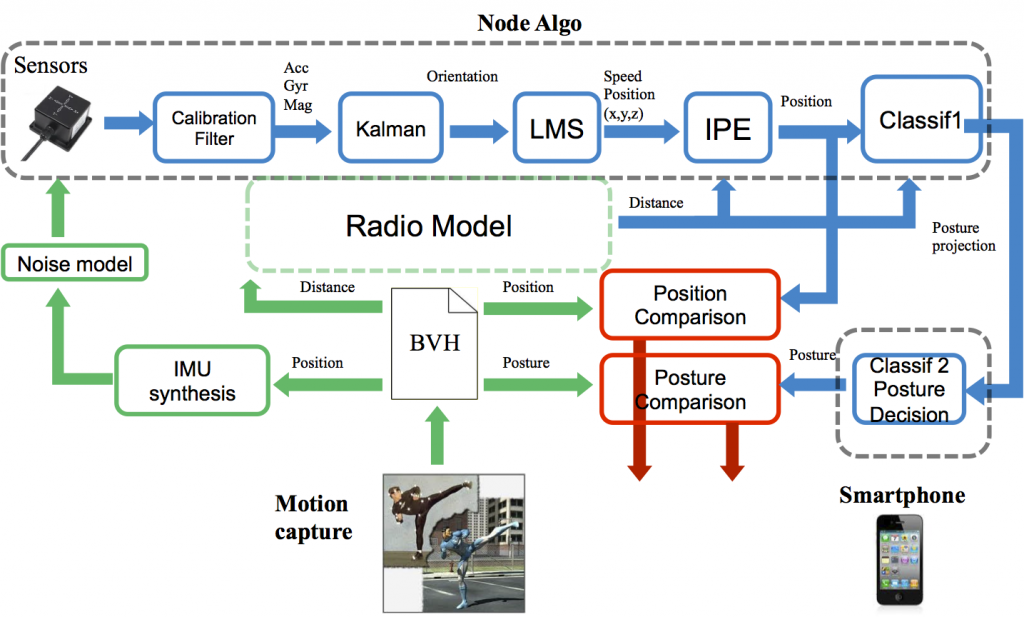

The simulator has been used successfully to compare and select a set of algorithms that have been partially implemented on the Zyggie V1 demonstrator and will be fully implemented on Zyggie V2. These algorithms are also required for the design of a dedicated node architecture that will be evaluated as a candidate for an ultra-low-power ASIC implementation. Figure 2 shows how the simulator can be used to test different algorithms for gesture and posture identification. The first algorithms tested with the simulator were:

- The Extended Kalman Filter (EKF) computes the orientation of each node.

- The LMS (least mean squares) filter predicts position and speed.

- IPE is an iterative, cooperative algorithm that computes each node's position from the position estimates of the other nodes.

- A classification algorithm (PCA-based in the first version) estimates a posture by matching it against a library of partial postures (arm, back, legs) proposed by the BoWI designers. The classification is distributed between the sensor nodes and the central node (the user's smartphone), and relies on posture radio signatures that take advantage of the intrinsic redundancy of BoWI.
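Of the algorithms listed above, the LMS predictor is the simplest to illustrate. The sketch below is a generic one-step-ahead LMS predictor for a single sampled coordinate (for example one axis of a node's position or speed). The class name, filter order, step size `mu`, and the sinusoidal stand-in signal are assumptions for illustration, not BoWI parameters.

```python
import math


class LMSPredictor:
    """One-step-ahead adaptive linear predictor trained with the LMS rule.

    order and mu are illustrative assumptions; a real node would tune them
    to its sampling rate and to the dynamics of the tracked signal.
    """

    def __init__(self, order=2, mu=0.5):
        self.w = [0.0] * order        # filter weights
        self.history = [0.0] * order  # most recent samples, newest first
        self.mu = mu

    def predict(self):
        """Linear prediction of the next sample from the stored history."""
        return sum(wi * xi for wi, xi in zip(self.w, self.history))

    def update(self, x):
        """Predict the next sample, then adapt the weights using the true value x."""
        y = self.predict()
        e = x - y
        # LMS rule: w <- w + mu * e * u, where u is the history vector
        self.w = [wi + self.mu * e * xi for wi, xi in zip(self.w, self.history)]
        self.history = [x] + self.history[:-1]
        return y, e


# Usage: track a slowly oscillating coordinate (synthetic stand-in signal).
lms = LMSPredictor(order=2, mu=0.5)
errors = [abs(lms.update(math.sin(0.1 * k))[1]) for k in range(10000)]
early = sum(errors[:100]) / 100
late = sum(errors[-100:]) / 100
print(early, late)  # the late prediction error should be far below the early one
```

The step size trades convergence speed against stability and noise sensitivity, so it would need retuning for real, noisy IMU data.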

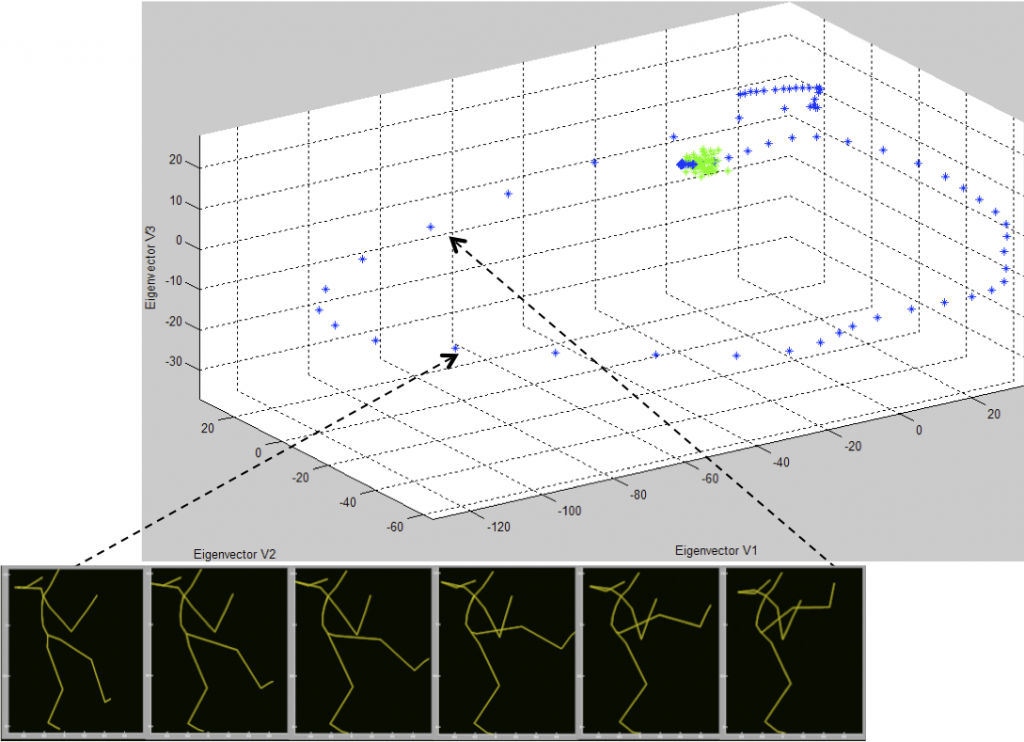

As an example, Figure 3 depicts simulator outputs for a continuous Capoeira movement. The simulator was indispensable both for designing and refining the sets of algorithms and for identifying the opportunities and limitations of the BoWI approach.

Figure 3: Illustration, with a Capoeira movement, of possible signatures to be extracted during a PCA-based classification.

The different algorithms were designed and simulated for three application classes:

- Static posture recognition, based on accelerometer, magnetometer and/or RSSI measurements and classification algorithms (e.g. PCA).
  - Applications: Simon Posture Game (repetition of postures), home control.

- Gesture recognition, where a gesture is treated as a temporal sequence of static postures, with sparse use of gyroscopes.
  - Applications: remote (at-home) functional rehabilitation, sport gesture monitoring, musculoskeletal disorder risk monitoring (workplace risk prevention).

- Motion capture, which relies not on classification but on determining the orientation and 3D position of the nodes.
  - Applications: gesture recording, video games, etc.
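Motion capture needs the 3D position of each node, which the IPE algorithm computes iteratively from the estimates of other nodes. The source does not detail IPE, so the sketch below swaps in a generic cooperative localization scheme to illustrate the idea: gradient descent on the squared range residuals, where every free node moves using its neighbors' current estimates. This is not the BoWI IPE algorithm. The function name, the fixed anchor nodes, the step size `lr`, and the iteration count are assumptions, and in practice the ranges would be noisy RSSI-derived distances rather than exact ones.

```python
import math


def cooperative_positions(estimates, anchors, ranges, lr=0.2, iters=500):
    """Iteratively refine node positions from pairwise range estimates.

    estimates: dict node -> initial (x, y, z) guess for the nodes to locate
    anchors:   dict node -> known (x, y, z), kept fixed (hypothetical reference nodes)
    ranges:    dict (a, b) -> measured distance in metres, one entry per pair
    Each iteration, every free node takes a gradient step on
    0.5 * sum((|p_i - p_j| - d_ij)^2) using the *current* estimates of the
    other nodes (Jacobi-style update), which is what makes it cooperative.
    """
    pos = dict(estimates)
    for _ in range(iters):
        known = {**anchors, **pos}  # snapshot of all current positions
        new_pos = {}
        for i, p_i in pos.items():
            grad = [0.0, 0.0, 0.0]
            for (a, b), d in ranges.items():
                if i not in (a, b):
                    continue
                j = b if a == i else a
                p_j = known[j]
                r = math.dist(p_i, p_j)
                if r < 1e-9:
                    continue  # direction undefined when two points coincide
                for k in range(3):
                    grad[k] += (r - d) * (p_i[k] - p_j[k]) / r
            new_pos[i] = tuple(p_i[k] - lr * grad[k] for k in range(3))
        pos = new_pos
    return pos


# Usage: locate one body node "N" from exact distances to four reference nodes.
anchors = {"A": (0, 0, 0), "B": (1, 0, 0), "C": (0, 1, 0), "D": (0, 0, 1)}
true_n = (0.3, 0.4, 0.2)
ranges = {(a, "N"): math.dist(p, true_n) for a, p in anchors.items()}
est = cooperative_positions({"N": (0.5, 0.5, 0.5)}, anchors, ranges)
print(est["N"])  # should approach (0.3, 0.4, 0.2)
```

With several free nodes, ranges between two free nodes are also used, so each node's update depends on how well its neighbors are currently localized.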

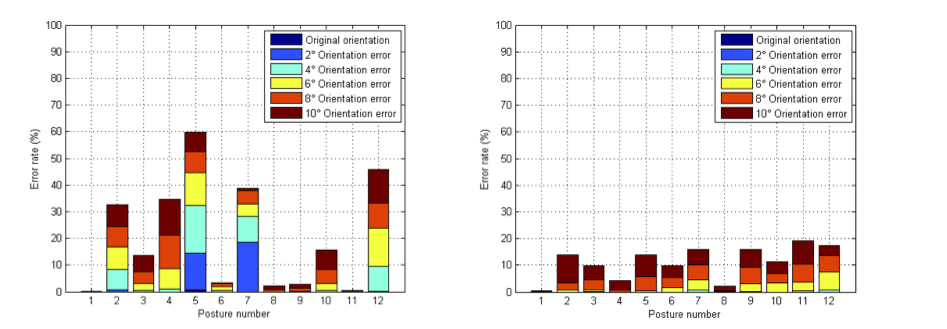

Figure 4 shows an example of 12 postures used in a static posture recognition application. Figure 5 shows the error rate for each posture as a function of the user's orientation error. This orientation error represents the tolerance of the system when recognizing inexact postures with a PCA using two eigenvalues, with orientation data only (Fig. 5, left) and with orientation + RSSI (Fig. 5, right).
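A PCA-based posture classifier of this kind can be sketched as follows, under assumptions the source does not state. Each training sample is a feature vector (orientation angles only, or orientation angles concatenated with RSSI values). The features are projected onto the first two principal components, and a posture is assigned to the nearest class centroid in that plane. The principal components are computed by power iteration with deflation to keep the example dependency-free. The class name, posture labels, feature values, and noise levels are hypothetical.

```python
import math
import random


def _mean(rows):
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def _covariance(rows, mu):
    """Sample covariance matrix (n - 1 normalization)."""
    d, n = len(mu), len(rows)
    cov = [[0.0] * d for _ in range(d)]
    for r in rows:
        c = [x - m for x, m in zip(r, mu)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / (n - 1)
    return cov


def _top_eigvecs(cov, k, iters=500, seed=0):
    """Top-k eigenvectors of a symmetric matrix via power iteration with deflation."""
    rng = random.Random(seed)
    d = len(cov)
    a = [row[:] for row in cov]
    vecs = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(a[i][j] * v[j] for j in range(d)) for i in range(d))
        vecs.append(v)
        # Remove the found component so the next pass finds the next eigenvector
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return vecs


class PCAPostureClassifier:
    def fit(self, features, labels, n_components=2):
        self.mu = _mean(features)
        self.axes = _top_eigvecs(_covariance(features, self.mu), n_components)
        groups = {}
        for f, lab in zip(features, labels):
            groups.setdefault(lab, []).append(self.project(f))
        self.centroids = {lab: _mean(pts) for lab, pts in groups.items()}
        return self

    def project(self, f):
        """Coordinates of a centred feature vector on the principal axes."""
        c = [x - m for x, m in zip(f, self.mu)]
        return [sum(ci * ai for ci, ai in zip(c, axis)) for axis in self.axes]

    def predict(self, f):
        """Label of the nearest class centroid in the principal-component plane."""
        z = self.project(f)
        return min(self.centroids, key=lambda lab: math.dist(z, self.centroids[lab]))


# Usage: three hypothetical postures described by 4 orientation angles (degrees).
rng = random.Random(1)
centers = {"neutral": (0, 0, 0, 0), "arm_side": (90, 0, 0, 0), "arm_up": (90, 90, 0, 0)}
X, y = [], []
for lab, c in centers.items():
    for _ in range(30):
        X.append([ci + rng.gauss(0, 5) for ci in c])
        y.append(lab)
clf = PCAPostureClassifier().fit(X, y)
print(clf.predict([88, 3, -2, 1]))
```

Increasing the noise standard deviation in the usage part is a crude way to mimic the growing orientation error on the horizontal axis of Figure 5.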