This project (Sept. 2022-June 2026) aims at increasing the navigation autonomy of Search-And-Rescue (SAR) drones while preserving their energy autonomy.

This requires improving the navigation and obstacle-avoidance algorithms already employed on drones. Towards this goal, we advocate enhancing sensing and processing tasks through low-energy hardware, such as event cameras and Field-Programmable Gate Arrays (FPGAs), and designing navigation and obstacle-avoidance algorithms that capitalize on deep neural network (DNN) architectures adapted to this new hardware. The project will prototype such an integrated system and make it available to the scientific community, to allow further investigation of the opportunities brought by this novel concept of drone architecture.

Proposed investigations for autonomy through energy-aware, low-latency design

Better way of sensing: event cameras

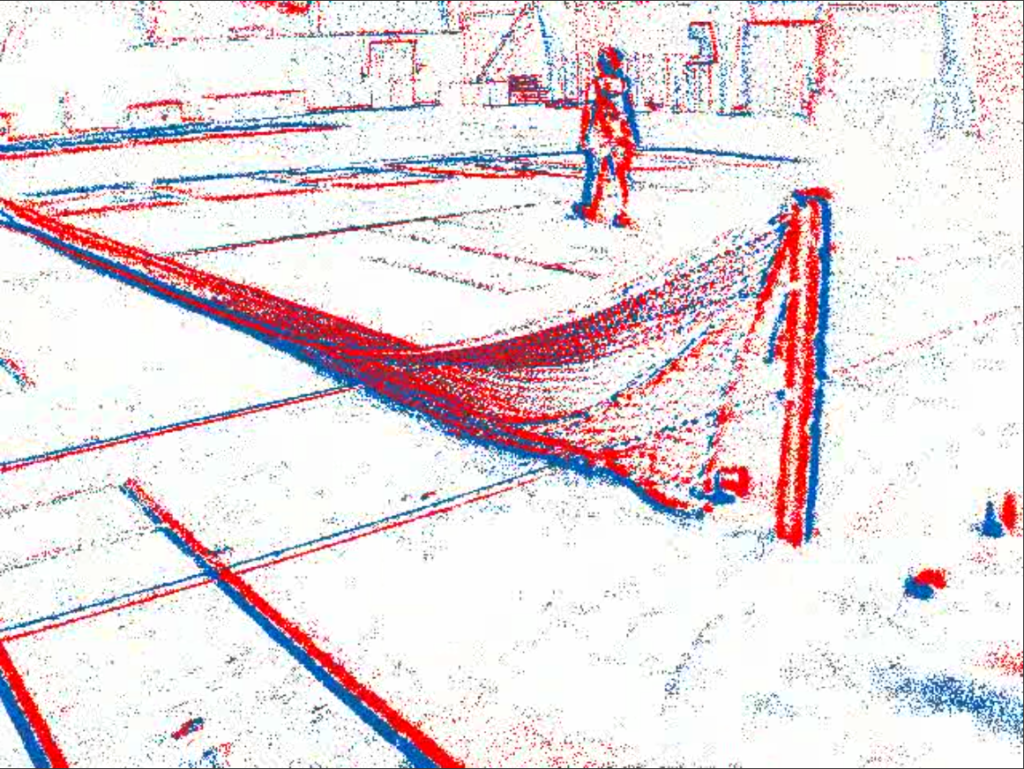

Event cameras represent a better way of sensing than RGB cameras when operating conditions are harsh and latency is critical. These sensors measure only intensity variations [1]. They offer low latency (approximately 1 µs), no motion blur, and a high dynamic range (140 dB compared to 60 dB for RGB cameras). Additionally, they consume very little power (1 mW on average, compared to 1 W for RGB cameras), which makes it possible to use many sensors.
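To make "measuring only intensity variations" concrete, here is a minimal sketch of the idealized event-generation model commonly used to describe these sensors: a pixel emits an event each time its log intensity has changed by more than a contrast threshold since the last event. The function name `pixel_events`, the `(timestamp, intensity)` input format, and the threshold values are assumptions made for illustration; real sensors add noise, refractory periods, and per-pixel threshold mismatch.

```python
import math


def pixel_events(samples, contrast_threshold=0.2):
    """Simulate one idealized event-camera pixel.

    samples: list of (timestamp, intensity) pairs with intensity > 0.
    Returns a list of (timestamp, polarity) events, polarity being +1 or -1.
    """
    events = []
    _, first_intensity = samples[0]
    # Log intensity memorized at the last emitted event (hypothetical model state).
    reference = math.log(first_intensity)
    for timestamp, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        # A large change produces several events, one per threshold crossing.
        while abs(delta) >= contrast_threshold:
            polarity = 1 if delta > 0 else -1
            events.append((timestamp, polarity))
            reference += polarity * contrast_threshold
            delta = math.log(intensity) - reference
    return events


# A static pixel produces no events; a brightening then darkening pixel does.
samples = [(0.0, 1.0), (1.0, 1.0), (2.0, 2.0), (3.0, 0.5)]
print(pixel_events(samples, contrast_threshold=0.5))
```

Under this model a static scene generates no data at all, which is one way to see where the low latency and low power consumption come from.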

However, using these sensors is not straightforward and raises many questions.

Traditional algorithms can be used with these cameras, but are they the best choice? Event cameras have asynchronous pixels, whose outputs are called events, and do not provide absolute intensity, only binary intensity changes. Can we combine these cameras with RGB cameras to benefit from sensor fusion?
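One common way to reuse frame-based algorithms on event data is to accumulate the events of a short time window into an image-like grid. The sketch below assumes events are given as `(x, y, timestamp, polarity)` tuples; this format, the function name `accumulate_events`, and the window bounds are illustrative assumptions, not the project's actual pipeline.

```python
def accumulate_events(events, width, height, t_start, t_end):
    """Sum event polarities per pixel over the window [t_start, t_end).

    events: iterable of (x, y, timestamp, polarity), polarity being +1 or -1.
    Returns a height x width grid (list of rows) of signed event counts.
    """
    frame = [[0] * width for _ in range(height)]
    for x, y, timestamp, polarity in events:
        if t_start <= timestamp < t_end:
            frame[y][x] += polarity
    return frame


# Four events on a 2x2 sensor; only the first three fall inside the window.
events = [(0, 0, 0.1, 1), (0, 0, 0.2, 1), (1, 0, 0.3, -1), (1, 1, 0.9, 1)]
print(accumulate_events(events, width=2, height=2, t_start=0.0, t_end=0.5))
```

Such a representation discards the fine timing of individual events, which is part of why the question of whether traditional algorithms are the best fit remains open.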

Event cameras may be ideal for fast motion thanks to their low latency and asynchronous, independent pixels, while RGB cameras provide rich information. Which tasks can be deployed on the hardware to benefit from the most appropriate sensor, and at what cost?

Finally, how can we train DNNs without large datasets? Even though more and more event-based datasets are available, they do not cover all the diverse conditions that SAR drones may encounter during their missions. This may hinder the use of DNNs in real-world situations.

[1] Tutorial on Event Cameras by Scaramuzza

Better way of processing: DNNs on FPGA

Implementing DNNs on an FPGA embedded on a drone is feasible [2] and opens up many possible trade-offs between accuracy, frame rate, latency and power consumption. Such a system can achieve the same accuracy and frame rate as a GPU-based one with one half to one third of the energy consumption [3]. However, a challenge remains: how can we jointly customize the DNNs and the FPGA configuration to further reduce energy consumption without losing accuracy when using event cameras? Do we have to design specific DNNs? What is the best methodology: compressing large, effective models, or directly designing reduced-size models that immediately fit the limited hardware resources? Is there any difference between processing RGB and event information?
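As an illustration of the "compressing large models" option, the sketch below applies symmetric uniform quantization to a list of weights, turning floating-point values into small signed integers that are cheaper to store and process on limited hardware. The source does not say which compression technique the project uses; quantization is chosen here only as an example, and the names `quantize_symmetric` and `dequantize` and the 8-bit default are assumptions.

```python
def quantize_symmetric(weights, num_bits=8):
    """Map float weights to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    # Avoid a zero scale when all weights are zero.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their integer codes."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
codes, scale = quantize_symmetric(weights)
restored = dequantize(codes, scale)
# The rounding error per weight is at most half a quantization step.
print(max(abs(w - r) for w, r in zip(weights, restored)) <= scale / 2 + 1e-12)
```

Fewer bits shrink the model further but enlarge the quantization step, which is one concrete form of the accuracy versus resource trade-off discussed above.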

[2] KÖVARI, Balint Barna and Emad EBEID (2021). “MPDrone: FPGA-based Platform for Intelligent Real-time Autonomous Drone Operations”. In: 2021 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR).

[3] XU, Xiaowei et al. (2019). “DAC-SDC low power object detection challenge for UAV applications”. In: IEEE Transactions on Pattern Analysis and Machine Intelligence.