Watch Chuan’s presentation

Abstract

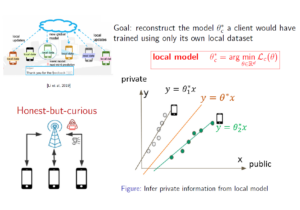

Federated learning (FL) naturally offers a certain level of privacy, as clients’ data is not collected by a third party. However, keeping the data local does not in itself provide formal privacy guarantees. An (honest-but-curious) adversary can still infer sensitive client information just by eavesdropping on the exchanged messages (e.g., gradients). In this talk, we will present a new model reconstruction attack for federated learning, in which an honest-but-curious adversary reconstructs the local model of a client. The success of this attack enables better performance of other known attacks, such as membership attacks and attribute inference attacks. We provide analytical guarantees for the success of this attack when training a linear least squares problem with full batch size and an arbitrary number of local steps. A heuristic is proposed to generalize the attack to other machine learning problems. Experiments are conducted on logistic regression tasks, showing high reconstruction quality, especially when clients’ datasets are highly heterogeneous (as is common in federated learning).

Short bio

Chuan Xu joined Université Côte d’Azur (UCA) as an associate professor (« maître de conférences ») in Sept. 2021; she is a member of the I3S laboratory and of the COATI project-team. Before that, she was a postdoctoral researcher in the NEO team at Inria Sophia Antipolis from 2018 to 2021. She received her PhD in Computer Science from Université Paris-Saclay in Dec. 2017. Her research interests include distributed machine learning, privacy in federated learning, and self-stabilizing distributed algorithms.

The presentation will be in English and streamed on BBB.