Ys.AI

The Project

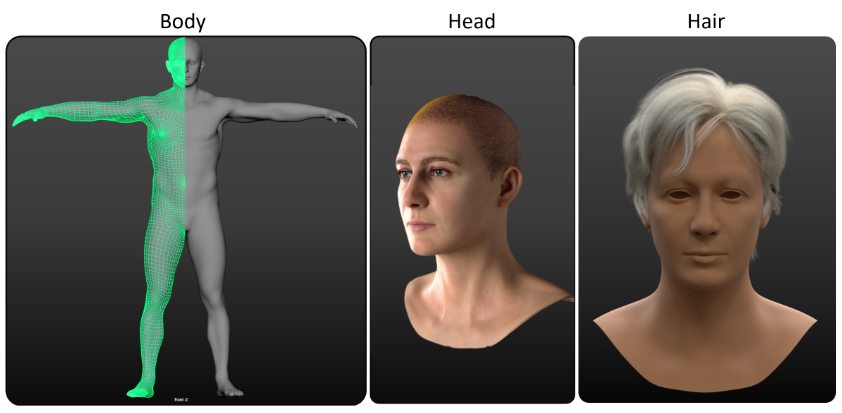

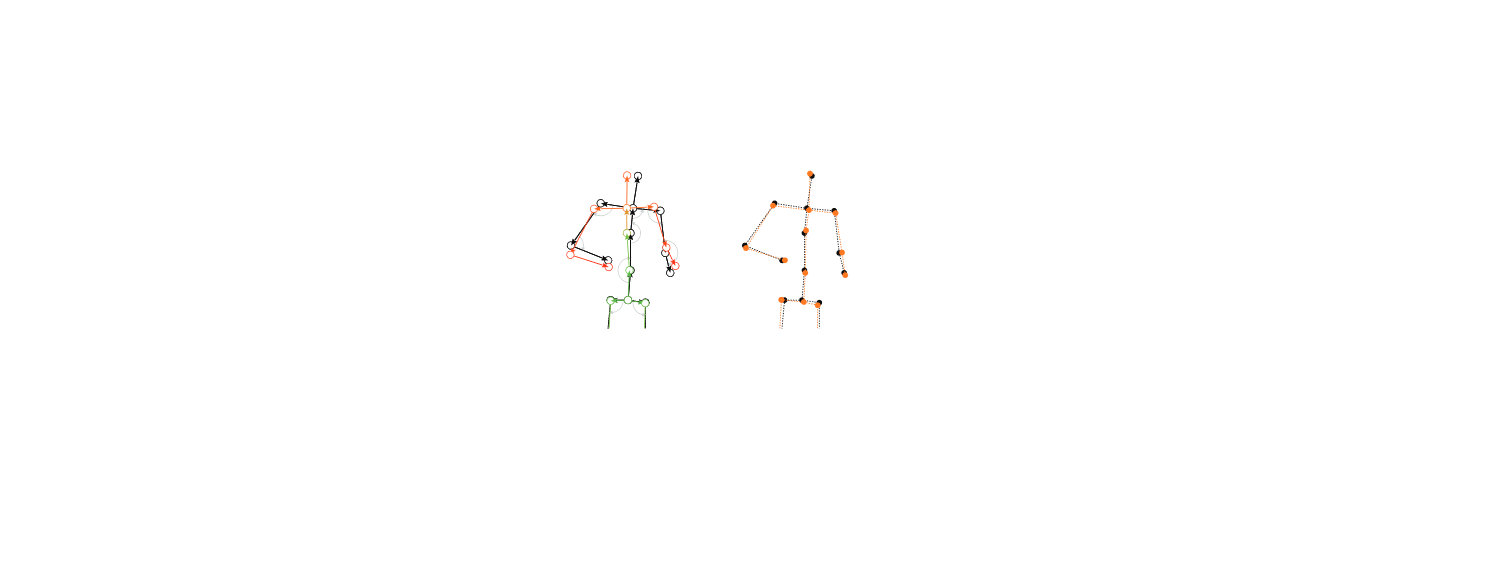

The metaverse became a buzzword following recent announcements of massive investments in these new universes. It is not a new concept: Second Life and a few other earlier attempts opened the door to this new way of communicating. It can be seen as the future of immersive social and professional communication for the emerging AI-based e-society, and it relies on a large number of technologies (AR/VR/XR, blockchain/NFT, AI, CG, low-latency networks, interactions, coding, rendering…).

InterDigital foresees high potential in the development of metaverse technologies and associated representation formats. However, the range of technologies to address is too large, and some selection is necessary. One of the selected topics is the representation of humans within those mixed real and virtual environments, and how they interact with each other and with the scene (including its objects).

The goal of the project is thus to address this topic, with a special focus on representation formats for digital avatars and their behavior in a digital and responsive environment. It includes the study of deep learning and, more generally, artificial intelligence technologies applied to the interactions and animation of avatars and to the associated representation format(s). The main challenge is to overcome the uncanny valley effect in order to provide users with natural and lifelike social interaction between real and virtual actors, leading to full engagement in those future metaverse experiences.

Some Partners

Inria

- MimeTIC (Rennes): Adnane Boukhayma, Franck Multon

- VIRTUS (Rennes): Ludovic Hoyet, Julien Pettré

- MORPHEO (Grenoble): Stefanie Wuhrer, Edmond Boyer

- HYBRID (Rennes): Ferran Argelaguet, Anatole Lécuyer

InterDigital

- InteractiveMedia (Rennes): Philippe Guillotel, Quentin Avril, Quentin Galvane, François Le Clerc

- SympAI (Rennes): Pierre Hellier, Francois Schnitzler