ICAV

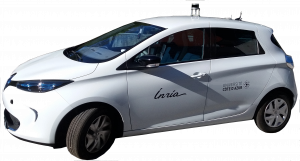

ICAV is an Intelligent and Connected Autonomous Vehicle. It is built on a Renault ZOE robotized by Ecole Centrale of Nantes (by the team set up by Philippe Martinet in LS2N/ARMEN).

Figure: ICAV platform and its web interface

The robotization gives access to the control of:

- Steering angle (or steering torque)

- Braking torque

- Acceleration

- Gear box

- Blinking light

In its original version, it is equipped with the following embedded sensors:

- Car odometry and Velocity

- Low cost GPS (Ublox 6)

- Low cost IMU

- Lidar VLP16 from Velodyne

- Two front cameras in the bumper

- One rear camera in the bumper

and one embedded computer, with a web interface connected to a simple tablet. All the equipment is connected to the existing comfort battery.

This equipment was funded by UCA (Digital Reference Centre) and delivered in late 2018.

In addition, in the framework of a collaboration between CHORALE and LS2N/ARMEN, a global application for Mapping/Localization/Navigation/Parking is installed in the vehicle. This application uses a LIDAR VLP16 based mapping algorithm developed in Nantes, including the work from the last two years of collaboration between CHORALE and ARMEN. In January 2019, we mapped the Inria Sophia Antipolis Center and other places in Sophia Antipolis. In all these places, it is possible to localize the vehicle, record a path and then proceed to autonomous navigation (provided we obtain the authorization to do so). A fast prototyping environment called ICARS is available for both simulation and development purposes.

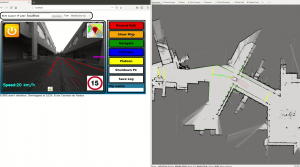

In December 2019, we evaluated the navigation algorithm on the new experimental site made available by CASA.

Figure: CASA experimental site in Sophia Antipolis

DRONIX

In 2019, we defined and installed a motion capture system composed of 6 cameras from the QUALISYS company. This system makes it possible to track and localize a multi-robot system.

Figure: DRONIX platform

In our applications, we will consider the use of UAVs and possibly the collaboration between UAVs and AGVs. The DRONIX platform will be used for real-time navigation, and as a ground truth system. The system has a central computer, and each robot will be able to access the global information over Wi-Fi.
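As an illustration of what using the motion capture system as ground truth can look like, here is a minimal sketch that compares a robot's own position estimates with reference positions. It assumes both trajectories are already time-synchronized and expressed in the same frame; the function name `position_rmse` and the tuple-based data layout are hypothetical and do not come from the DRONIX software.

```python
import math


def position_rmse(estimated, ground_truth):
    """Root-mean-square position error between two trajectories.

    Both arguments are sequences of (x, y, z) tuples in metres.
    Assumption: samples are paired one-to-one (same timestamps, same frame).
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")

    # Squared Euclidean distance for each pair of samples.
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, ref))
        for est, ref in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    onboard = [(0.0, 0.0, 1.0), (1.1, 0.0, 1.0), (2.0, 0.1, 1.0)]
    mocap = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
    print(f"RMSE: {position_rmse(onboard, mocap):.3f} m")
```

In practice the two data streams would first need time alignment and a frame transformation, which this sketch leaves out.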

Hannibal

We have an indoor mobile robot (called Hannibal) equipped with a 360 degree RGB-D vision system composed of 8 Kinects. It runs on ROS.

PERCEPTION360

Perception360 is an integration software platform for all perception developments in the Inria CHORALE team.

All functions have been coded in a modular and scalable ROS environment, with a generic model that takes into account the different sensors: monocular perspective vision (RGB), stereo perspective vision (RGB-D), and spherical vision (RGB and RGB-D).

As an integration software platform, it provides:

- Image acquisition (for a set of existing sensors)

- Sensor calibration (for a set of existing sensors)

- Image registration (in a common spherical RGB/RGB-D Perception 360 format)

- Visual odometry

- Localization

- Keyframe-based mapping

The main applications concern representation of the environment (multi-layer topological and spherical representation), localization, SLAM and navigation.
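To give a feel for what registering data into a spherical representation involves, the sketch below maps a 3D point in a sensor frame to pixel coordinates on a spherical image. It assumes an equirectangular layout and a frame with x to the right, y down and z forward; both are illustrative assumptions, since the actual Perception 360 format is not described here, and the function name is hypothetical.

```python
import math


def point_to_equirectangular(x, y, z, width, height):
    """Project a 3D point onto an equirectangular spherical image.

    Assumed frame: x right, y down, z forward (illustrative only).
    Returns (u, v, r): column, row, and range of the point.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("the origin has no direction on the sphere")

    azimuth = math.atan2(x, z)      # in [-pi, pi], 0 means straight ahead
    elevation = math.asin(-y / r)   # in [-pi/2, pi/2], positive means up

    # Straight ahead lands in the image centre; columns wrap around.
    u = ((azimuth / (2.0 * math.pi) + 0.5) * width) % width
    v = (0.5 - elevation / math.pi) * height
    return u, v, r


if __name__ == "__main__":
    # A point one metre straight ahead maps to the centre of the image.
    print(point_to_equirectangular(0.0, 0.0, 1.0, 2048, 1024))
```

Keeping the range `r` alongside the pixel coordinates is what allows the same mapping to serve both RGB and RGB-D data.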

Figure: PERCEPTION360