Research Interests

- Symbolic Music information processing

- Quantitative extensions of formal language models

- Structured representations of Music notation

- Hierarchical representations of digital music scores

- Prior languages of music notation style

- Search and Retrieval in symbolic musical content

- Similarity metrics and edit-distances

Research Topics

Automated Music Transcription

Trained musicians, while listening to music performances, are able to transcribe them into Common Western music notation. We are studying computational methods to automate this process by parsing an input sequence of timed music events (a MIDI file) into a structured music score in a format such as XML/MEI. Our approach is based on two main ingredients.

First, we use quantitative language theoretical models and techniques, in order to

- represent the notation style aimed for the output score, by means of weighted tree grammars,

- compute a distance between input sequence and output notation, with weighted pushdown transducers,

- compose the above weighted models, and apply optimization algorithms in order to extract the best (weighted) parsing solutions.
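The three steps above can be illustrated with a deliberately small sketch. It is not the weighted tree grammar or transducer machinery itself: the rule weights, the depth bound, and the alignment distance (each onset is snapped to the nearest bound of its interval) are all assumptions chosen for illustration. It searches, by exhaustive recursion, for the subdivision tree of one bar that minimizes the sum of rule weights (notation complexity) and alignment distances (fidelity to the input).

```python
from fractions import Fraction

# Hypothetical rule weights: cost of dividing an interval into k equal parts.
RULE_WEIGHT = {2: 0.1, 3: 0.15}
MAX_DEPTH = 3  # hypothetical bound on the depth of subdivisions


def best_parse(onsets, start=Fraction(0), end=Fraction(1), depth=0):
    """Return (cost, tree) for the onsets that fall in [start, end).

    A tree is either "leaf" or a pair (k, [k subtrees]).
    Onsets are floats expressed as fractions of the bar.
    """
    inside = [t for t in onsets if start <= t < end]
    # Option 1: stop here; every onset is snapped to the nearest bound.
    leaf_cost = sum(min(t - start, end - t) for t in inside)
    best = (float(leaf_cost), "leaf")
    # Option 2: divide the interval in k parts and parse each part.
    if depth < MAX_DEPTH and inside:
        for k, weight in RULE_WEIGHT.items():
            step = (end - start) / k
            children = [
                best_parse(inside, start + i * step, start + (i + 1) * step, depth + 1)
                for i in range(k)
            ]
            cost = weight + sum(c for c, _ in children)
            if cost < best[0]:
                best = (cost, (k, [tree for _, tree in children]))
    return best


cost, tree = best_parse([0.0, 0.51, 0.74])
print(cost, tree)
```

For these three onsets, the cheapest tree divides the bar in two and then divides the second half in two again: the small timing deviations are absorbed as alignment cost rather than notated with finer subdivisions.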

Second, we created an abstract, hierarchical Intermediate Representation (IR) of music scores that can be built from the parse trees returned by the above parsing algorithms and exported into XML score formats. The design of this IR is a research topic per se, described below.

This method enables the study of theoretical foundations for the problem of music transcription, and it has been instantiated in several case studies, such as the following.

Monophonic transcription

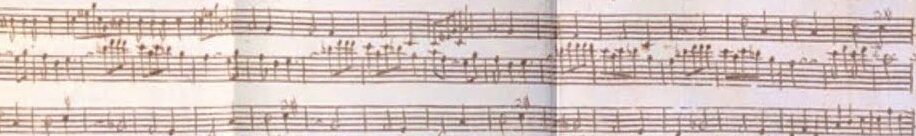

In the case of melodies with at most one note sounding at a time, we were able to improve the level of complexity and details of transcriptions, in particular for handling ornaments and rests, as illustrated in these examples.

The above examples were taken from a dataset of about 300 extracts from the classical repertoire, with increasing rhythmic and melodic complexity, provided as XML (scores) and MIDI (performance) files. We have built this dataset for the evaluation and training of our tools. It should also be useful to the community as a benchmark for the evaluation of the transcription of complex melodies.

Bassline transcription

In collaboration with John Xavier Riley from C4DM at Queen Mary University of London, we are working on an approach for end-to-end transcription of jazz basslines, following a two-step workflow: a MIDI file is first extracted from audio recordings of jazz standards, with source separation, pitch, and onset estimation techniques, and our transcription tools are then applied as a back-end to produce a music score. Significant improvements have been made to our techniques in order to deal with swing and with the particular pitch-spelling issues (see below) that arise in jazz. Some preliminary results may be found here and here.

Drum transcription

The output of electronic drum kits can be recorded into MIDI files. Google Magenta provided a dataset of more than 13 hours of MIDI recordings of drummers on a Roland TD-11 kit. After some successful first experiments presented at an international workshop and conference, we are now conducting an effort to transcribe this dataset into drum scores, based on our tools. This work, in the context of the thesis of Lydia Rodriguez-de la Nava, benefits from the expertise of Martin Digard, a professional drummer holding a Master’s degree in Natural Language Processing from INALCO. It is also the first real-world application of our transcription approach to a polyphonic instrument.

Piano transcription

Scaling up from monophonic to polyphonic instruments is a difficult step in the context of transcription. This is one of the main topics of the thesis of Lydia Rodriguez-de la Nava. She is developing voice-separation algorithms for this purpose and integrating them into our transcription framework and models. This work is supported by the ASAP dataset, made of linked piano scores and MIDI performances, published in collaboration with Francesco Foscarin and Andrew McLeod.

Music Score Model

We are developing an abstract Intermediate Representation of music scores, for various Music Information Retrieval problems such as transcription or music score analysis. It enables us to handle various score file formats (XML or plain text) as input or output, and to reason about and apply transformations to music content without the hassle of decoding these formats.

The main originality of our model is its tree structure, even at low levels (e.g. for the description of rhythms). A salient feature of music notation is indeed its hierarchical nature: events are grouped into bars, tuplets, and under beams, and durations are defined proportionally and composed with ties and dots.
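As a minimal illustration of this hierarchical view of rhythm, and not of the actual model, the sketch below encodes a bar as a nested list: each inner list divides the duration of its parent equally among its children, and a leaf labelled "tie" extends the previous event. The labels "n" and "tie" and the function names are hypothetical.

```python
from fractions import Fraction


def leaf_spans(tree, span=Fraction(1)):
    """List (label, duration) for every leaf, in left-to-right order.

    A tree is either a label (str) or a list of subtrees that share
    the duration `span` of their parent equally.
    """
    if isinstance(tree, str):
        return [(tree, span)]
    share = span / len(tree)
    spans = []
    for child in tree:
        spans.extend(leaf_spans(child, share))
    return spans


def event_durations(tree):
    """Durations of the events of a bar, merging each tie into the previous event."""
    durations = []
    for label, span in leaf_spans(tree):
        if label == "tie" and durations:
            durations[-1] += span
        else:
            durations.append(span)
    return durations


# One bar in four beats: a note, two halves of a beat, a triplet, and a tied beat.
bar = ["n", ["n", "n"], ["n", "n", "n"], "tie"]
print(event_durations(bar))
```

Durations are never stored in the tree: they follow from the position of each leaf, which is why proportional divisions such as the triplet need no special case.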

This model is used in particular in our transcription procedures, for post-processing transformations, by term rewriting of the scores obtained. It was also used for training automata models on corpora of digital music scores.

Voice separation

During her thesis, Lydia Rodriguez-de la Nava is developing algorithms for the separation of polyphonic music content into voices, that is, for distinguishing the melodies or chords that we perceive when listening. This problem is crucial in the context of music transcription, to produce easy-to-read scores, where the lines of melodies, accompaniments, bass lines, etc., are clearly represented.

She proposes a voice separation algorithm, which is based on principles of perception and is flexible with respect to the genre of music and the instrument. It works, roughly, by searching for a shortest path in a graph whose vertices are partitions of events into voices at every date. Some rules are defined in order to associate a cost to every such vertex, and another cost to transitions between vertices (at successive dates). This algorithm is evaluated on datasets of the music21 toolkit and on our dataset ASAP.
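The shortest-path formulation can be sketched as follows, under strong simplifying assumptions that are not those of the actual algorithm: each date carries at most as many pitches as there are voices, a vertex assigns each pitch to one voice, the vertex cost only penalizes voice crossings, and the transition cost is the pitch leap inside each voice. All cost constants and function names are hypothetical.

```python
from itertools import permutations

CROSSING_COST = 10  # hypothetical penalty per pair of crossing voices
REST_COST = 4       # hypothetical penalty when a voice starts or stops


def vertices(pitches, n_voices):
    """All assignments of the pitches of one date to n_voices voices."""
    padded = list(pitches) + [None] * (n_voices - len(pitches))
    return sorted(set(permutations(padded)), key=repr)


def vertex_cost(v):
    """Voice 0 is the top voice: penalize a lower voice holding a higher pitch."""
    notes = [p for p in v if p is not None]
    crossings = sum(
        1
        for i in range(len(notes))
        for j in range(i + 1, len(notes))
        if notes[i] < notes[j]
    )
    return CROSSING_COST * crossings


def transition_cost(u, v):
    """Sum of pitch leaps inside each voice between two successive dates."""
    cost = 0
    for p, q in zip(u, v):
        if p is not None and q is not None:
            cost += abs(p - q)
        elif p is not None or q is not None:
            cost += REST_COST
    return cost


def separate(dates, n_voices):
    """Shortest path through the layered graph; returns (cost, path)."""
    layer = {v: (vertex_cost(v), [v]) for v in vertices(dates[0], n_voices)}
    for pitches in dates[1:]:
        new_layer = {}
        for v in vertices(pitches, n_voices):
            cost, path = min(
                ((c + transition_cost(u, v), p) for u, (c, p) in layer.items()),
                key=lambda item: item[0],
            )
            new_layer[v] = (cost + vertex_cost(v), path + [v])
        layer = new_layer
    return min(layer.values(), key=lambda item: item[0])


# MIDI pitches sounding at three successive dates.
print(separate([[72, 60], [74, 59], [76, 57]], n_voices=2))
```

Because edges only connect vertices of successive dates, the graph is layered and a single forward pass of dynamic programming is enough to find a shortest path.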

Pitch Spelling

The height of a music note (pitch) is represented in the MIDI standard by an integer note number, one per semitone (from 0 to 127; the 88 keys of a piano correspond to numbers 21 to 108). In Common Western music notation, however, it is described by a note name (the distance between consecutive names is one tone or one semitone) and an optional modifier, which is a positive or negative number of semitones (represented by sharp or flat symbols). Therefore, several notations are possible for each MIDI number (for instance C# or Db for 73). Choosing an appropriate one is not obvious as it depends on context (direction of the melody, tonal context…).
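The ambiguity can be made concrete by enumerating the candidate spellings of a given MIDI number. The sketch below is only an illustration of the problem, not one of the procedures described here; it assumes the common convention that MIDI number 60 is written C4, and the function name and `max_alter` parameter are hypothetical.

```python
# Semitone offset of each note name above C, within one octave.
STEP_SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
ACCIDENTALS = {-2: "bb", -1: "b", 0: "", 1: "#", 2: "##"}


def spellings(midi, max_alter=1):
    """Candidate spellings of a MIDI number with at most max_alter sharps or flats."""
    candidates = []
    for name, base in STEP_SEMITONES.items():
        for alter in range(-max_alter, max_alter + 1):
            if (base + alter) % 12 == midi % 12:
                # The octave is that of the unaltered note (assumes MIDI 60 = C4).
                octave = (midi - alter) // 12 - 1
                candidates.append(f"{name}{ACCIDENTALS[alter]}{octave}")
    return candidates


print(spellings(73))  # ['C#5', 'Db5']
print(spellings(60))  # ['C4', 'B#3']
```

Allowing double sharps and double flats (`max_alter=2`) adds further candidates, so the choice has to be guided by the surrounding notes and the key.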

We have proposed two approaches for the joint estimation of pitch spelling and key signatures from MIDI files.

- A first procedure, pkspell, is data-driven, based on the training of a deep recurrent neural network model on the ASAP piano dataset. It has been evaluated on ASAP and the MuseData dataset dedicated to pitch spelling.

- A second, more recent procedure is algorithmic, based on Dynamic Programming techniques for minimizing the number of symbols in notation. It is currently under evaluation.

Melodic similarity evaluation

We are studying metrics of similarity between melodies, defined as edit distances for character strings (ED) and labeled trees (TED). In particular, with Mathieu Giraud (Algomus team, Lille), we have conducted an in-depth theoretical study on the computability of an ED introduced in 1990 by Mongeau and Sankoff and widespread in the Music Information Retrieval community.
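For readers unfamiliar with edit distances, the sketch below computes a plain weighted edit distance between two melodies given as sequences of (MIDI pitch, duration) pairs. It is not the Mongeau and Sankoff distance, which also includes fragmentation and consolidation operations; the cost values and function names are assumptions chosen for illustration.

```python
def substitution_cost(x, y):
    """Hypothetical cost: 0.5 for a pitch change plus 0.5 for a duration change."""
    (pitch_x, dur_x), (pitch_y, dur_y) = x, y
    return 0.5 * (pitch_x != pitch_y) + 0.5 * (dur_x != dur_y)


def melody_distance(a, b, indel=1.0):
    """Weighted edit distance between two lists of (pitch, duration) pairs."""
    # prev[j] is the distance between the notes of a read so far and b[:j].
    prev = [j * indel for j in range(len(b) + 1)]
    for i, x in enumerate(a, 1):
        cur = [i * indel]
        for j, y in enumerate(b, 1):
            cur.append(
                min(
                    prev[j] + indel,                         # delete x
                    cur[j - 1] + indel,                      # insert y
                    prev[j - 1] + substitution_cost(x, y),   # replace x by y
                )
            )
        prev = cur
    return prev[-1]


theme = [(60, 1), (62, 1), (64, 2)]
print(melody_distance(theme, [(60, 1), (64, 2)]))           # one note removed
print(melody_distance(theme, [(60, 1), (63, 1), (64, 2)]))  # one pitch changed
```

The same dynamic programming scheme extends to labeled trees (TED), at the price of a more involved recursion over subtrees.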

Moreover, we are developing solutions for comparing digital music score files. This cannot be achieved reliably by running a simple Unix diff on the two (XML) text files, due to the ambiguity and verbosity of the score formats (not to mention incompatibilities between different formats, different versions of the same format, and files produced by different software). With Francesco Foscarin, we have proposed a procedure based on an ad-hoc similarity metric combining several ED and TED. It is much more involved than the comparison of text files, because of the complex structure of music scores. This approach has been presented in this conference and this conference. It is currently used in a case study on Crowdsourced Correction of Optical Music Recognition output for a musicological collection with the IReMus lab.

Digital music score collections

All the above research topics find application in the constitution, management, and search and retrieval of databases of music scores, for cultural heritage preservation and study.