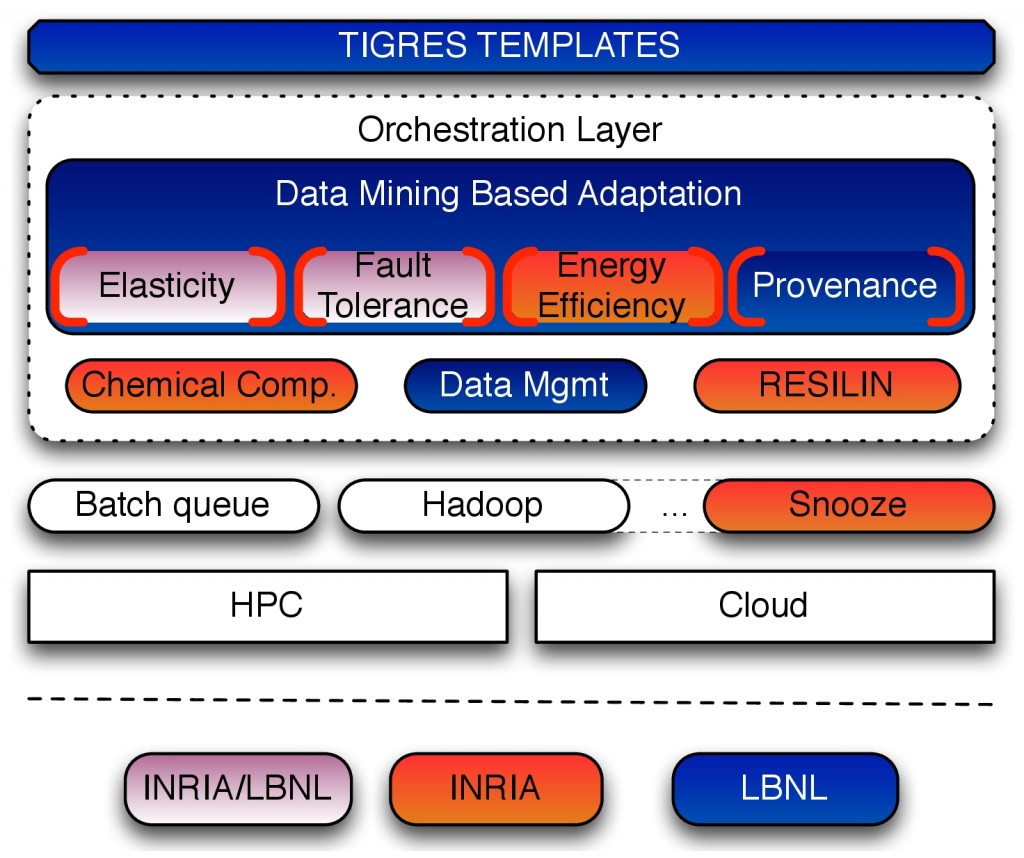

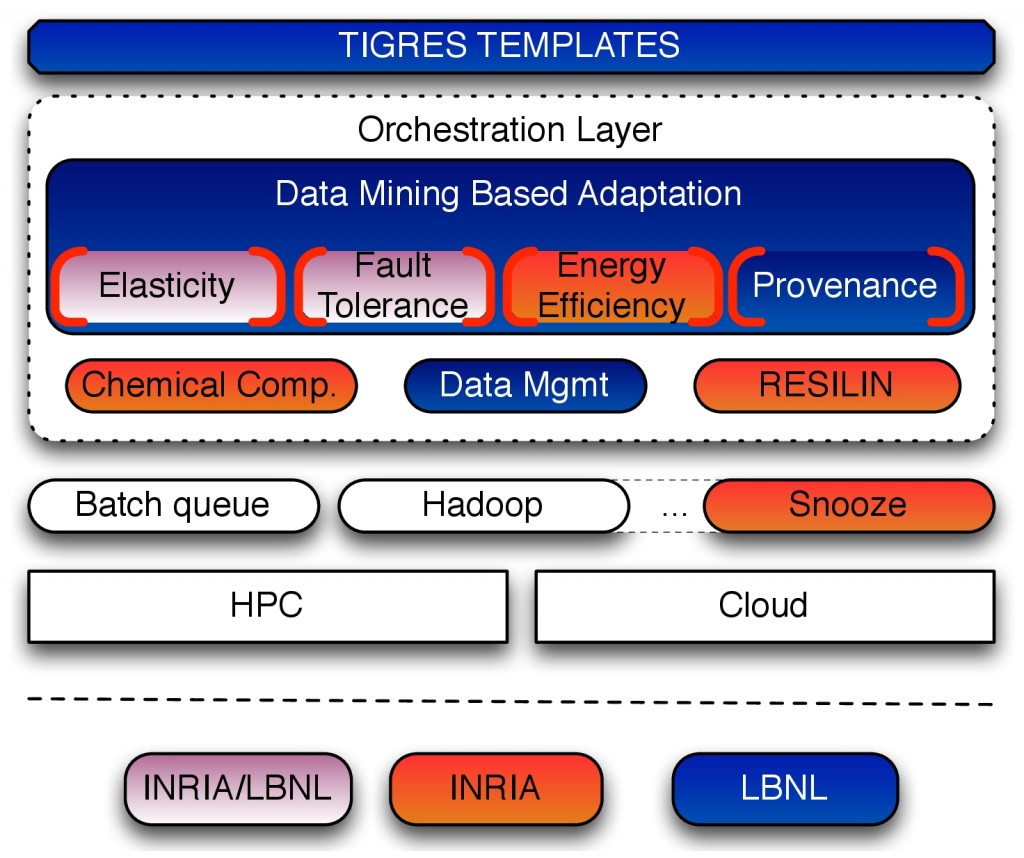

The objective of the first three years of the collaboration was to create a software ecosystem that facilitates seamless data analysis across desktop, HPC and cloud environments. Specifically, our goal was to build a dynamic software stack that is user-friendly, scalable, energy-efficient and fault-tolerant.

Research areas

- A programming environment for scientific data analysis workflows. An integrated capability that will allow users to easily compose their workflows in a programming environment such as Python and execute them on diverse high-performance computing (HPC) and cloud resources.

- An adaptive orchestration layer. An orchestration layer that coordinates resource and application characteristics. The adaptation model will use real-time data mining to support elasticity, fault tolerance, energy efficiency and provenance.

- Infrastructure support for HPC, clusters and cloud systems. Research that will determine how to provide execution environments that allow users to seamlessly execute their dynamic data analysis workflows across various research environments and at various scales.
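
As a rough illustration of the first research area, the sketch below shows one way a workflow could be composed in Python so that its definition stays separate from the resource that runs it. It uses only the standard-library `concurrent.futures` module. The function names `load`, `square` and `run_workflow` are hypothetical and are not part of the project's software stack, and a local thread pool merely stands in for an HPC or cloud backend.

```python
from concurrent.futures import Executor, ThreadPoolExecutor


def load(n: int) -> list[int]:
    """Hypothetical input stage: produce n integers to analyze."""
    return list(range(n))


def square(x: int) -> int:
    """Hypothetical per-item analysis step."""
    return x * x


def run_workflow(executor: Executor, n: int) -> int:
    """Run load -> map(square) -> sum on whichever executor is supplied.

    The workflow depends only on the generic Executor interface, so the
    caller chooses where the per-item work actually runs.
    """
    data = load(n)
    squared = list(executor.map(square, data))
    return sum(squared)


if __name__ == "__main__":
    # A local thread pool stands in for a remote resource in this sketch.
    with ThreadPoolExecutor(max_workers=4) as pool:
        print(run_workflow(pool, 10))
```

Because `run_workflow` accepts any object implementing the `Executor` interface, the same workflow definition could in principle be handed to a different backend without modification, which is the kind of portability the research area describes.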
