Authors: Tom Bachard and Thomas Maugey

Abstract:

Coding algorithms usually aim to reconstruct images faithfully, which limits the achievable compression gain. However, in some cases, such as cold data, much more drastic compression ratios must be targeted, which may mean giving up pixel-wise reconstruction of the inputs. In this work, we propose a generative compression pipeline made of a foundation model as the semantic encoder and a diffusion model as the image generator. We also propose a way to evaluate the images generated by this pipeline, since the classical MSE is not relevant here. Finally, we test the effects of quantization on the semantic encoder's latent space, hoping to obtain a semantic quantization.
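The abstract does not specify how the semantic encoder's latent space is quantized. The sketch below is a minimal illustration under stated assumptions. It uses a uniform scalar quantizer applied independently to each coordinate of a unit-normalized 512-dimensional embedding, which is the size of CLIP ViT-B/32 image embeddings. It shows how the fixed-length bit budget grows with the number of bits per coordinate and how much of the embedding's direction survives, measured by cosine similarity. The function names, the clipping range [-0.2, 0.2], and the random stand-in embedding are hypothetical choices, not the authors' method.

```python
import math
import random
from typing import List


def quantize_uniform(z: List[float], num_bits: int, lo: float, hi: float) -> List[int]:
    """Map each coordinate to the index of the nearest of 2**num_bits evenly spaced levels in [lo, hi]."""
    step = (hi - lo) / (2 ** num_bits - 1)
    # Clip to [lo, hi] first so every index lands in [0, 2**num_bits - 1].
    return [round((min(max(x, lo), hi) - lo) / step) for x in z]


def dequantize_uniform(indices: List[int], num_bits: int, lo: float, hi: float) -> List[float]:
    """Map level indices back to their reconstruction values."""
    step = (hi - lo) / (2 ** num_bits - 1)
    return [lo + i * step for i in indices]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (direction agreement, ignoring scale)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def random_unit_embedding(dim: int, seed: int = 0) -> List[float]:
    """Hypothetical stand-in for a semantic embedding: a random unit-norm vector."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in z))
    return [x / norm for x in z]


if __name__ == "__main__":
    DIM = 512            # assumed embedding size (CLIP ViT-B/32)
    LO, HI = -0.2, 0.2   # hypothetical clipping range for the quantizer
    z = random_unit_embedding(DIM)
    for bits in (2, 4, 8):
        z_hat = dequantize_uniform(quantize_uniform(z, bits, LO, HI), bits, LO, HI)
        # Fixed-length cost per image, before any entropy coding of the indices.
        print(f"{bits} bits/coord -> {DIM * bits} bits, cosine = {cosine_similarity(z, z_hat):.4f}")
```

Cosine similarity is used here because CLIP-style embeddings are usually compared by direction. It is only an illustrative distortion measure on the latent vector, not necessarily the image evaluation the authors propose.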

Supplementary results:

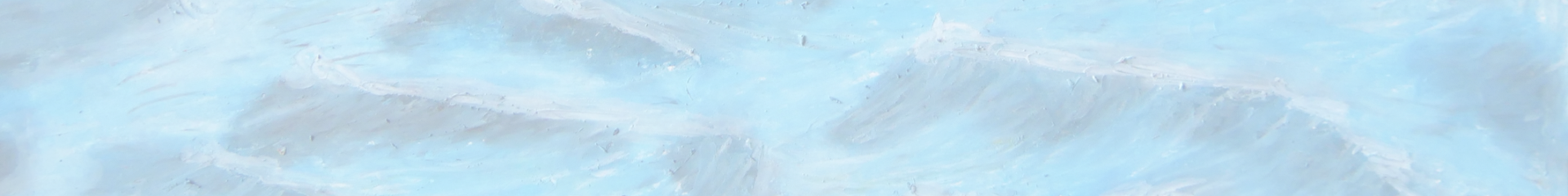

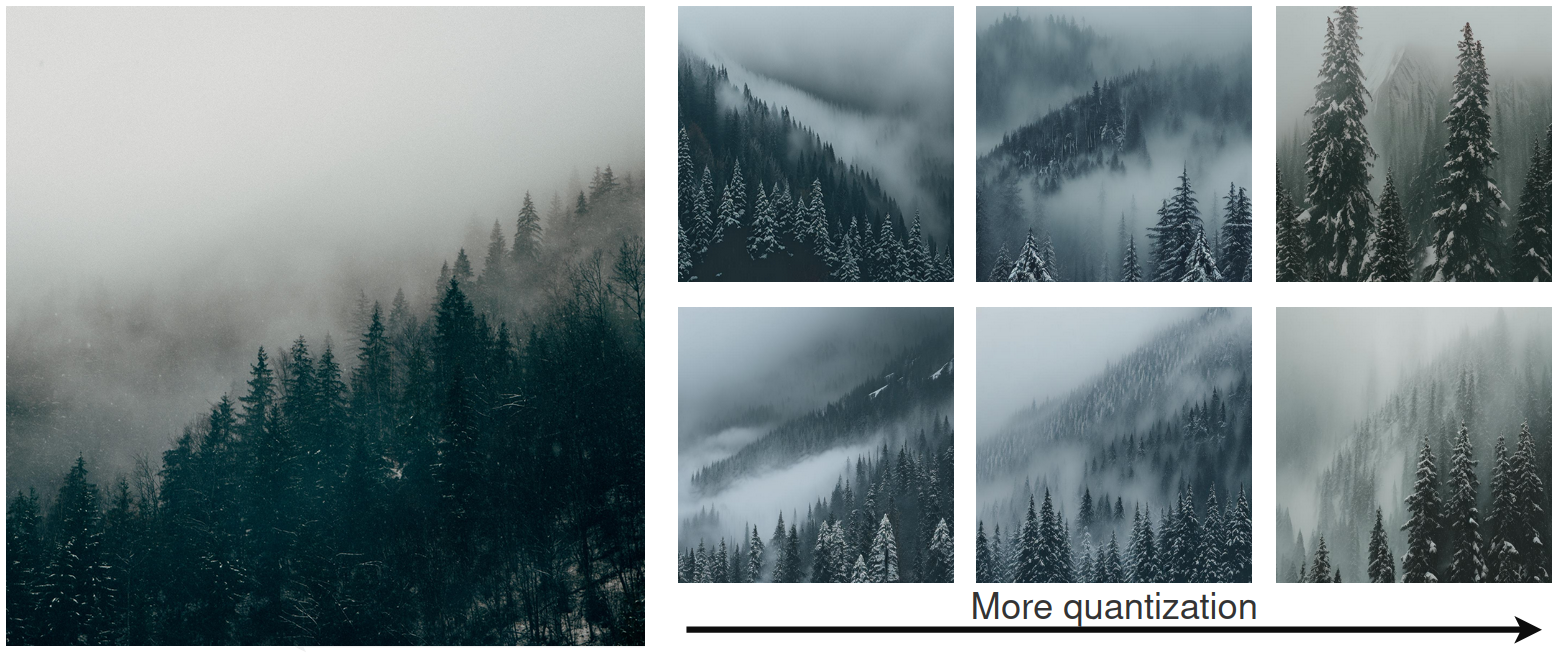

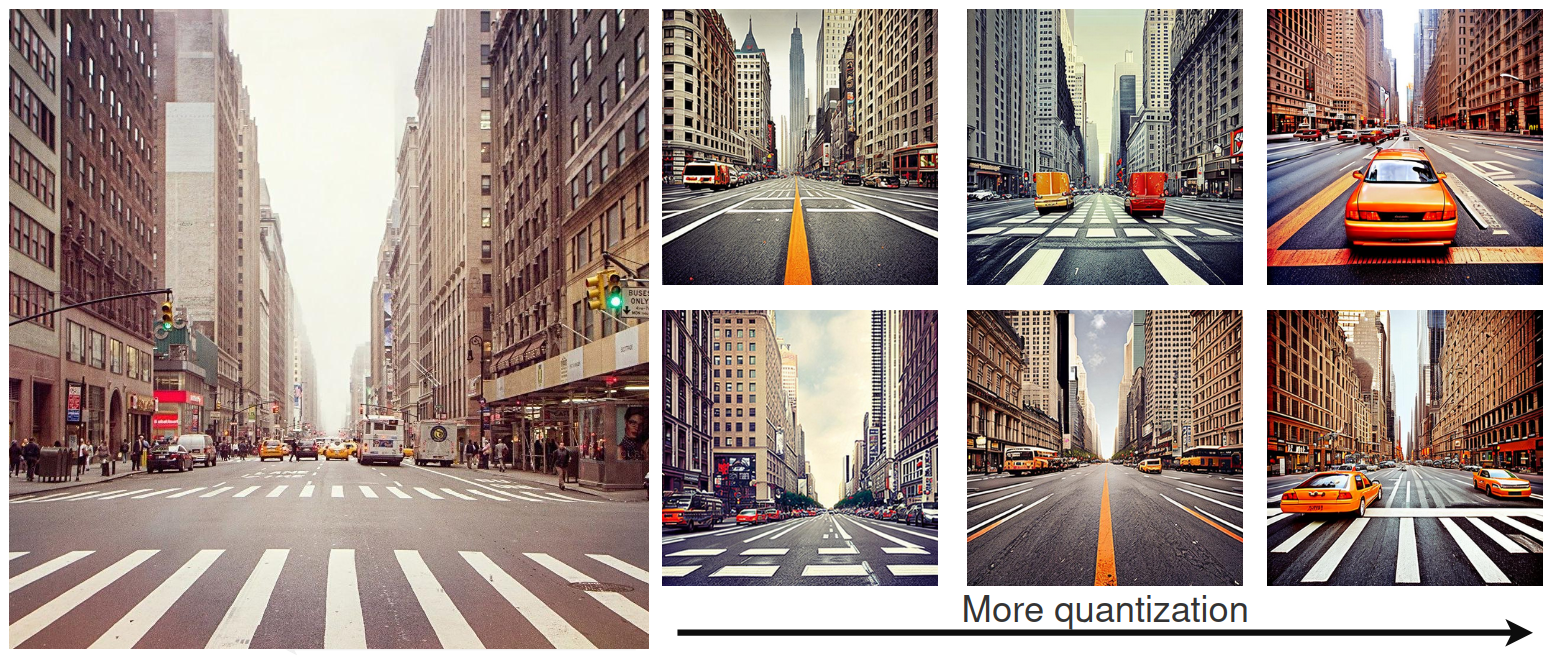

Images generated from CLIP representations

A snowy forest

A New York street

A stadium crowd

A concert crowd

A bird in a tree