
Introduction

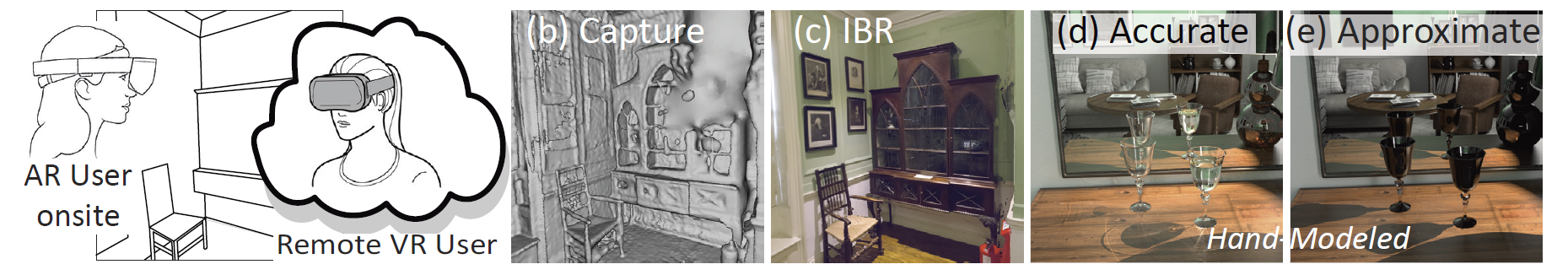

Recent years have seen an explosion in the use of 3D Computer Graphics (CG) in Virtual and Augmented Reality (AR/VR), games, film, training or architecture; the emergence of cheap and powerful head-mounted displays has amplified this trend. Consider the use case below, where an on-site AR user is redecorating a room in collaboration with a remote VR user [Sco16]. Such rapidly emerging applications need algorithms that produce highly realistic interactive CG renderings at unprecedented scales and scope. Addressing these demands requires us to rethink the foundations of our field, to overcome fundamental limitations in CG.

Capture methods, e.g., from depth sensors, can provide a model (b), but it is uncertain, i.e., inaccurate and incomplete. This model can be rendered in the remote VR headset using Image-Based Rendering (IBR) (c), but there is no flexibility to modify the scene, such as adding objects or changing lighting. Such flexibility requires manually designed content, which is extremely time-consuming and expensive to create (d-e). High-quality accurate rendering (d) uses expensive simulation to compute global illumination effects. While many fast, GPU-based approximate rendering algorithms (e) have been developed, they offer no guarantee on accuracy and often miss important visual effects, e.g., light paths reflecting off the mirror and refracting through glass.

We see that accurate [PJH16], approximate [RDGK12] and image-based rendering (e.g., [CDSHD13, HRDB16]) algorithms each have largely incompatible but often complementary tradeoffs in quality, speed and flexibility. In addition, for methods other than IBR, the use of captured content is only possible with significant manual labor. There is currently no way to exploit the benefits of each method together, nor the constantly expanding wealth of captured content, in a single algorithm. To address these problems we revisit the foundations of Computer Graphics rendering, so that the advantages of these disparate methods can be used together in new unified algorithms. Our solutions will introduce the treatment of uncertainty as the missing ingredient for achieving this goal. Uncertainty arises naturally with captured data, as sensor noise or as uncertainty in 3D geometry reconstruction. Interestingly, the central notion of variance in accurate Monte-Carlo rendering can be seen as uncertainty in the rendering process. This previously unidentified link suggests uncertainty as a promising tool to address the limitations of CG. Estimating uncertainty for approximate algorithms and propagating uncertainty from input content to rendering algorithms have never been considered before, possibly due to the very different methodologies of the approaches involved.
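To make the link between Monte-Carlo variance and uncertainty concrete, here is a minimal sketch, not taken from the project itself. It estimates a one-dimensional integral from random samples and reports the standard error of the estimate, which plays the role of the rendering uncertainty for that value. The function names and the toy integrand are assumptions chosen for illustration; a real renderer would integrate radiance over light paths instead.

```python
import math
import random


def mc_estimate(f, n, rng):
    """Estimate the integral of f over [0, 1] from n uniform samples.

    Returns (mean, standard_error). The standard error quantifies the
    uncertainty of the estimate and shrinks roughly as 1/sqrt(n).
    """
    samples = [f(rng.random()) for _ in range(n)]
    mean = sum(samples) / n
    # Unbiased sample variance of the integrand values.
    var = sum((s - mean) ** 2 for s in samples) / (n - 1)
    return mean, math.sqrt(var / n)


def toy_integrand(x):
    # Hypothetical stand-in for the light arriving at one pixel.
    return math.sin(math.pi * x)


rng = random.Random(0)
for n in (16, 256, 4096):
    estimate, std_error = mc_estimate(toy_integrand, n, rng)
    print(f"n={n:5d}  estimate={estimate:.4f}  std_error={std_error:.4f}")
```

The exact value of this integral is 2/π, so the printed estimates can be checked against it; the reported standard error should fall as the sample count grows.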

Methodology

We propose a new methodology by introducing rendering and input uncertainty. We define output, or rendering, uncertainty Uo as the expected error of a rendering solution with respect to an ideal image, taken over the parameters and algorithmic components used. We define input uncertainty Ui as the expected error of the content with respect to the ideal scene being represented, taken over the different parameters involved in its generation. Here the ideal scene is a perfectly accurate model of the real world, i.e., its geometry, materials and lights; the ideal image is an infinite-resolution, high-dynamic-range image of this scene.
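One possible way to write Uo and Ui as formulas is sketched below. The text above gives the definitions only in words, so every symbol here is an assumption introduced for illustration: θ collects the rendering parameters and algorithmic choices with distribution p(θ), R_θ(S) is the image rendered from scene content S, I* is the ideal image, and d_I is some image error metric; φ collects the parameters of content generation with distribution p(φ), C_φ is the resulting content, S* is the ideal scene, and d_S is some scene error metric.

```latex
U_o = \mathbb{E}_{\theta \sim p(\theta)}\!\left[\, d_I\big(R_\theta(S),\, I^\ast\big) \right],
\qquad
U_i = \mathbb{E}_{\phi \sim p(\phi)}\!\left[\, d_S\big(C_\phi,\, S^\ast\big) \right]
```

Read this way, both quantities are expectations of an error against an ideal reference, which is what lets them be compared across accurate, approximate and image-based renderers.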

By introducing methods to estimate rendering uncertainty we will quantify the expected error of previously incompatible rendering components with a unique methodology for accurate, approximate and image-based renderers. This will allow FunGraph to define a unified renderer that can exploit the advantages of these very different approaches in a single algorithmic framework, providing a fundamentally different approach to rendering. A key component of this solution is the use of captured content: we will develop methods to estimate input uncertainty and to propagate it to the unified renderer, allowing this content to be exploited by all rendering approaches.

Estimating and propagating these uncertainties is hard: it is difficult to determine the domain and to sample the parameters of the processes involved, and computing these uncertainties requires a large number of evaluations of complex rendering or reconstruction operations. We will use three promising methodological tools: i) generating and using extensive synthetic (but also captured) ground truth data, which allows us to estimate uncertainties based on high-quality images and the corresponding scene data; ii) the methods and terminology of Uncertainty Quantification (UQ) [Iac09, Smi13], which inspire us to develop effective solutions; and iii) advances in machine learning, and Bayesian Deep Learning [KG17, GG16] in particular, which provide ways to exploit the power of modern inference methods and simultaneously estimate uncertainty.
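As an illustration of the third tool, the sketch below shows the idea behind Monte-Carlo dropout from [GG16]: dropout stays active at prediction time, and the spread of repeated stochastic forward passes serves as an estimate of model uncertainty. This is a toy under stated assumptions, not the project's method. The one-hidden-layer network, its random untrained weights, and the function name `mc_dropout_predict` are all hypothetical.

```python
import random
import statistics


def mc_dropout_predict(x, w_hidden, w_out, p_drop, n_passes, rng):
    """Run n_passes stochastic forward passes with dropout left on.

    Returns (mean, variance) of the scalar outputs. The variance is a
    rough proxy for model uncertainty, in the spirit of [GG16].
    """
    keep = 1.0 - p_drop
    outputs = []
    for _ in range(n_passes):
        y = 0.0
        for w_h, w_o in zip(w_hidden, w_out):
            h = max(0.0, w_h * x)        # ReLU hidden unit
            if rng.random() < keep:      # this unit survives dropout
                y += w_o * h / keep      # inverted-dropout rescaling
        outputs.append(y)
    return statistics.fmean(outputs), statistics.pvariance(outputs)


rng = random.Random(0)
# Hypothetical, untrained toy weights for a 32-unit hidden layer.
w_hidden = [rng.uniform(-1.0, 1.0) for _ in range(32)]
w_out = [rng.uniform(-1.0, 1.0) for _ in range(32)]

mean, var = mc_dropout_predict(0.5, w_hidden, w_out,
                               p_drop=0.2, n_passes=200, rng=rng)
print(f"prediction={mean:.4f}  variance={var:.6f}")
```

With `p_drop=0.0` every pass is identical and the variance collapses to zero, which is a quick sanity check that the reported spread comes only from the dropout sampling.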

The goal of FunGraph is to fundamentally transform computer graphics rendering, by providing a solid theoretical framework based on uncertainty to develop a new generation of rendering algorithms. These algorithms fully exploit the spectacular – but previously disparate and disjoint – advances in rendering, and benefit from the enormous wealth offered by constantly improving captured input content.

References

- [CDSHD13] Gaurav Chaurasia, Sylvain Duchene, Olga Sorkine-Hornung, and George Drettakis. Depth synthesis and local warps for plausible image-based navigation. ACM Trans. on Graphics, 32(3), 2013.

- [GG16] Yarin Gal and Zoubin Ghahramani. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In International Conference on Machine Learning, pages 1050-1059, 2016.

- [HRDB16] Peter Hedman, Tobias Ritschel, George Drettakis, and Gabriel Brostow. Scalable inside-out image-based rendering. ACM Transactions on Graphics (TOG), 35(6):231, 2016.

- [Iac09] Gianluca Iaccarino. Quantification of uncertainty in flow simulations using probabilistic methods. Technical report, NATO Research & Technology, France, 2009.

- [KG17] Alex Kendall and Yarin Gal. What uncertainties do we need in Bayesian deep learning for computer vision? arXiv preprint arXiv:1703.04977, 2017.

- [PJH16] Matt Pharr, Wenzel Jakob, and Greg Humphreys. Physically based rendering: From theory to implementation. Morgan Kaufmann, 2016.

- [RDGK12] Tobias Ritschel, Carsten Dachsbacher, Thorsten Grosch, and Jan Kautz. The state of the art in interactive global illumination. In Computer Graphics Forum, volume 31, pages 160-188. Wiley Online Library, 2012.

- [Sco16] VR Scout. Microsoft wants to power virtual reality headsets, 2016. https://vrscout.com/news/microsoft-to-power-virtual-reality-headsets/.

- [Smi13] Ralph C. Smith. Uncertainty quantification: Theory, implementation, and applications, volume 12. SIAM, 2013.

ERC Advanced grant number 788065 (FUNGRAPH)