Some of the code and data related to tasks addressed in the Semapolis project have been made publicly available. They are listed below.

Object Recognition and Detection

- Category-Agnostic Bounding Box Proposals (AttractioNet). Spyros Gidaris and Nikos Komodakis have developed a CNN-based method for generating bounding box proposals in images that do not depend on a specific object category [Gidaris & Komodakis BMVC 2016]. The approach has been extensively evaluated on several image datasets (COCO, PASCAL, ImageNet detection and NYU-Depth V2). On all of them it reports state-of-the-art results that surpass previous work in the field by a significant margin, and it provides strong empirical evidence that the approach generalizes to unseen categories. Code is available from a git repository (https://github.com/gidariss/AttractioNet).

- Object Detection based on a Multi-region and Semantic Segmentation-Aware CNN Model. Spyros Gidaris and Nikos Komodakis have developed a CNN-based method for accurately localizing objects in images [Gidaris & Komodakis ICCV 2015]. It relies on a multi-region deep convolutional neural network (CNN) that also encodes semantic segmentation-aware features. It outperforms previously published work by a significant margin on the PASCAL VOC2007 and PASCAL VOC2012 detection challenges. Code is available from a git repository (https://github.com/gidariss/mrcnn-object-detection/).

- Improvement of Detection Location Accuracy (LocNet). Spyros Gidaris and Nikos Komodakis have developed a CNN-based method for improving the localization accuracy of object detectors in images [Gidaris & Komodakis CVPR 2016]. It significantly improves mAP at high IoU thresholds on the PASCAL VOC2007 test set, and it can easily be coupled with recent state-of-the-art object detection systems to boost their performance. Code is available from a git repository (https://github.com/gidariss/LocNet/).

- Dynamic Few-Shot Visual Learning without Forgetting. Spyros Gidaris and Nikos Komodakis have developed a CNN-based method that efficiently learns novel categories from only a few training examples while not forgetting the initial categories on which the network was trained [Gidaris & Komodakis CVPR 2018]. It improves the state of the art in few-shot recognition on Mini-ImageNet without sacrificing any accuracy on the base categories, a property that most prior approaches lack. It also achieves state-of-the-art results on Bharath and Girshick’s few-shot benchmark. Code is available from a git repository (https://github.com/gidariss/FewShotWithoutForgetting).

- Unsupervised Representation Learning by Predicting Image Rotations (FeatureLearningRotNet). Spyros Gidaris, Praveer Singh and Nikos Komodakis have developed a CNN-based method to learn image features without supervision, by training ConvNets to recognize the 2D rotation applied to their input image [Gidaris et al. ICLR 2018]. This method significantly narrows the gap between supervised and unsupervised feature learning. On the PASCAL VOC 2007 detection task, it achieves the state of the art among unsupervised methods, only 2.4 points below the supervised case. Similarly striking results are obtained when transferring these unsupervised features to various other tasks, such as ImageNet classification, PASCAL classification, PASCAL segmentation and CIFAR-10 classification. Code is available from a git repository (https://github.com/gidariss/FeatureLearningRotNet).
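The rotation-prediction pretext task behind FeatureLearningRotNet can be illustrated by how its training batches are built. The minimal NumPy sketch below assumes square images stored as height × width × channels arrays and uses a hypothetical helper name, `make_rotation_batch`. It only creates the rotated copies and their labels (rotations of 0, 90, 180 and 270 degrees mapped to classes 0 to 3) and leaves out the ConvNet that learns to classify them, so it is not the code of the repository.

```python
import numpy as np


def make_rotation_batch(images):
    """Build a rotation-prediction batch from a list of square HxWxC images.

    Each image yields four samples, rotated counter-clockwise by 0, 90, 180
    and 270 degrees and labelled 0, 1, 2 and 3 respectively. Square images
    are assumed so that every rotated copy has the same shape.
    """
    samples, labels = [], []
    for img in images:
        for k in range(4):
            # np.rot90 rotates by k * 90 degrees in the (H, W) plane.
            samples.append(np.rot90(img, k=k, axes=(0, 1)))
            labels.append(k)
    return np.stack(samples), np.array(labels)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    batch = [rng.random((32, 32, 3)) for _ in range(2)]
    x, y = make_rotation_batch(batch)
    print(x.shape, y.tolist())  # (8, 32, 32, 3) [0, 1, 2, 3, 0, 1, 2, 3]
```

A network trained to predict `y` from `x` cannot solve the task without recognizing what the objects in the image are and how they are oriented, which is why this simple label can drive useful feature learning.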

Urban Image Dataset with Semantic Segmentation

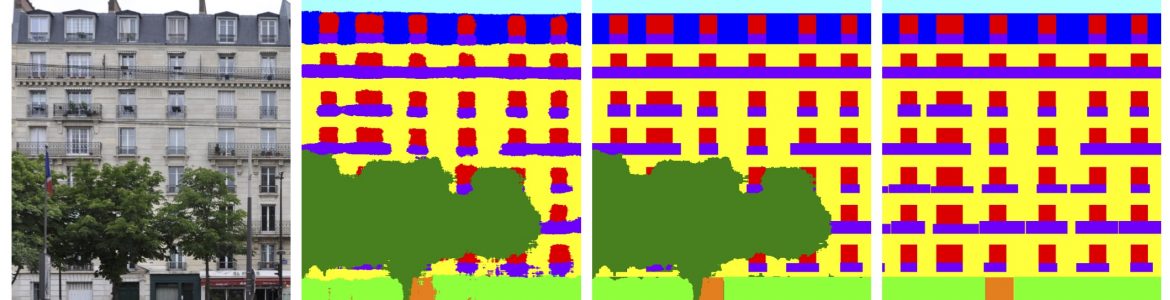

- Semantic Dataset of Art-Deco Facades, with Occlusion. Raghudeep Gadde has developed a dataset of 80 images of Parisian buildings in the Art-Deco style, with semantic annotations for the following classes: door, shop, balcony, window, wall, sky, roof. It has been augmented with synthetic tree occlusions (in both images and annotations). Data is available from a git repository (https://github.com/raghudeep/ParisArtDecoFacadesDataset/). This dataset has been used both to evaluate facade image parsers [Kozinski et al. CVPR 2015] and to learn shape grammars [Gadde et al. IJCV 2016].

Semantic Segmentation of Urban Images, 3D Point Clouds and Meshes

- 2D and 3D Facade Segmentation. Raghudeep Gadde, Varun Jampani, Renaud Marlet and Peter Gehler have developed a method for semantically labeling building facades in 2D (images) or 3D (point clouds or meshes) [Jampani et al. WACV 2015, Gadde et al. PAMI 2018]. Code and experimental data are available from the project page (https://ps.is.tuebingen.mpg.de/research_projects/facade-segmentation).

- Fast Algorithms to Learn Piecewise-Constant Functions on General Weighted Graphs. Loïc Landrieu and Guillaume Obozinski have developed efficient algorithms for problems regularized either by the total variation on a general weighted graph or by its ℓ0 counterpart, the total level-set boundary size. They apply when the piecewise-constant solutions have a small number of distinct level sets, as is the case in semantic segmentation [Landrieu & Obozinski SIIMS 2017]. This approach can then be used to efficiently segment urban point clouds semantically, as in [Guinard & Landrieu ISPRS Archives 2017, Landrieu et al. ISPRS P&RS 2017, Landrieu & Simonovsky CVPR 2018]. Code is available from a git repository (https://github.com/loicland/cut-pursuit).
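The two regularizers mentioned for cut pursuit can be made concrete on a small graph. For a signal x with one value per node and a weight w_ij per edge, the total variation is the sum of w_ij * |x_i - x_j| over all edges, and its ℓ0 counterpart, the total boundary size, is the sum of w_ij over the edges whose endpoints take different values. The NumPy sketch below only evaluates these two penalties; it does not implement the cut-pursuit optimization itself. It assumes scalar node values and a graph given as an edge list, and the function names are hypothetical.

```python
import numpy as np


def total_variation(x, edges, weights):
    """Graph total variation: sum over edges (i, j) of w_ij * |x_i - x_j|."""
    i, j = edges[:, 0], edges[:, 1]
    return float(np.sum(weights * np.abs(x[i] - x[j])))


def boundary_size(x, edges, weights):
    """l0 counterpart: total weight of edges joining nodes with different values."""
    i, j = edges[:, 0], edges[:, 1]
    return float(np.sum(weights[x[i] != x[j]]))


if __name__ == "__main__":
    # A 4-node path graph 0-1-2-3 carrying a piecewise-constant signal with
    # two level sets: nodes {0, 1} at value 0.0 and nodes {2, 3} at value 2.0.
    edges = np.array([[0, 1], [1, 2], [2, 3]])
    weights = np.array([1.0, 0.5, 1.0])
    x = np.array([0.0, 0.0, 2.0, 2.0])
    print(total_variation(x, edges, weights))  # 1.0 (0.5 * |0 - 2|)
    print(boundary_size(x, edges, weights))    # 0.5 (only edge 1-2 is cut)
```

For a piecewise-constant signal, both penalties only involve the edges that cross level-set boundaries; every edge inside a level set contributes zero.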

Urban Procedural Modeling

- Interactive Sketching of Urban Procedural Models. Gen Nishida, Ignacio Garcia-Dorado, Daniel G. Aliaga, Bedrich Benes and Adrien Bousseau have developed a method based on procedural models and machine learning to interactively create buildings using only raw sketches [Nishida et al. TOG 2016]. Related code is available from the project page (https://www.cs.purdue.edu/homes/gnishida/sketch/).

Extraction and Understanding of Features Representative of Urban Scenes

- Repetitive Structure Detection in Images. Akihiko Torii, Josef Sivic, Masatoshi Okutomi and Tomas Pajdla have developed a method to detect groups of repeated features in images, and have used it for visual place recognition, exploiting in particular the repeated structures found in building facades [Torii et al. PAMI 2015]. Code is available from the project page (http://www.ok.ctrl.titech.ac.jp/~torii/project/repttile/) and data is available on request.

- View Synthesis to Improve Place Recognition. Akihiko Torii, Relja Arandjelovic, Josef Sivic, Masatoshi Okutomi and Tomas Pajdla have developed a method to improve place recognition in cities from a single query photograph [Torii et al. CVPR 2015, Torii et al. PAMI 2017]. This method, which is based on view synthesis, is robust to strong illumination changes across different times of day. Code and data are available from the project page (http://www.ok.ctrl.titech.ac.jp/~torii/project/247/).

- Understanding Deep Features. Mathieu Aubry and Bryan Russell have developed a method for understanding deep features using computer-generated imagery [Aubry & Russell ICCV 2015]. This method, which separates factors such as viewpoint and style, has been tested on vehicles and furniture. Code and data are available from the project page (http://imagine.enpc.fr/~aubrym/projects/features_analysis/).

- Capture of Location-Dependent Image Features (NetVLAD). Relja Arandjelovic, Petr Gronat, Akihiko Torii, Tomas Pajdla and Josef Sivic have developed a CNN-based method that learns, in a weakly supervised setting, to produce image descriptors that are representative of the place where the images were taken, and they have used it for place recognition cast as image retrieval [Arandjelovic et al. CVPR 2016, Arandjelovic et al. PAMI 2017]. The network, trained on street images, captures strong urban features. Code and data are available from the project page (http://www.di.ens.fr/willow/research/netvlad/).
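At the heart of NetVLAD is a VLAD-style pooling layer. Each local descriptor is softly assigned to K cluster centres, the residuals to the centres are accumulated per cluster, and the result is intra-normalized and then L2-normalized into one global image descriptor, which is compared by dot product at retrieval time. The NumPy sketch below shows only this forward pass. It assumes soft-assignment weights computed as a softmax over squared distances to the centres, with a hypothetical sharpness parameter `alpha`. It is not the released implementation, in which the assignment parameters, the centres and the underlying CNN are learned end to end. The function names are hypothetical.

```python
import numpy as np


def netvlad_pool(descriptors, centers, alpha=10.0):
    """Aggregate N local descriptors (N x D) into one normalized K*D descriptor.

    Soft assignment a_k(x_i) is a softmax over -alpha * ||x_i - c_k||^2.
    """
    # Residuals x_i - c_k, shape (N, K, D).
    residuals = descriptors[:, None, :] - centers[None, :, :]
    logits = -alpha * np.sum(residuals ** 2, axis=2)     # (N, K)
    logits -= logits.max(axis=1, keepdims=True)          # numerical stability
    assign = np.exp(logits)
    assign /= assign.sum(axis=1, keepdims=True)
    # Assignment-weighted sum of residuals per cluster, shape (K, D).
    vlad = np.einsum("nk,nkd->kd", assign, residuals)
    # Intra-normalization: L2-normalize each cluster's residual vector.
    vlad /= np.linalg.norm(vlad, axis=1, keepdims=True) + 1e-12
    vlad = vlad.ravel()
    # Final L2 normalization of the concatenated descriptor.
    return vlad / (np.linalg.norm(vlad) + 1e-12)


def rank_database(query_desc, db_descs):
    """Return database indices sorted from most to least similar (dot product)."""
    return np.argsort(-(db_descs @ query_desc))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    centers = rng.normal(size=(8, 16))                      # K=8 clusters, D=16
    images = [rng.normal(size=(50, 16)) for _ in range(5)]  # 50 descriptors each
    db = np.stack([netvlad_pool(d, centers) for d in images])
    noisy = images[3] + 0.01 * rng.normal(size=(50, 16))
    print(rank_database(netvlad_pool(noisy, centers), db))
```

Because every descriptor is L2-normalized, ranking by dot product is the same as ranking by cosine similarity, or equivalently by Euclidean distance.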

2D-2D and 2D-3D Alignment and Detection

- 2D Geometric Matching. Ignacio Rocco, Relja Arandjelovic and Josef Sivic have developed a CNN-based method for determining correspondences between two images in agreement with a geometric model, such as an affine or thin-plate spline transformation, and for estimating its parameters [Rocco et al. CVPR 2017]. The network parameters can be trained from synthetically generated imagery without the need for manual annotation. The same model can perform both instance-level and category-level matching, giving state-of-the-art results on the challenging Proposal Flow dataset. Code is available from the project page (http://www.di.ens.fr/willow/research/cnngeometric/).

- Alignment of 3D Models of Architectural Sites to Photographic and Non-Photographic Imagery. Mathieu Aubry, Bryan Russell and Josef Sivic have developed a method for aligning 3D models of architectural sites to photographic and non-photographic imagery such as paintings and drawings [Aubry et al. TOG 2014, Aubry et al. chapter LSVG 2015]. Associated code and data (painting images and the 3D models to align them to) are available from the project page (https://www.di.ens.fr/willow/research/painting_to_3d/).

- Recognition of Furniture. Mathieu Aubry, Daniel Maturana, Alexei A. Efros, Bryan Russell and Josef Sivic have developed a method for recognizing furniture items such as chairs and predicting their approximate semantic 3D models [Aubry et al. CVPR 2014]. It is applicable to urban furniture. Corresponding code and data are available from the project page (http://www.di.ens.fr/willow/research/seeing3Dchairs/).

- Detection in 2D Images of 3D Objects from a Database. Francisco Massa, Bryan Russell and Mathieu Aubry have developed a method for detecting, in images, objects from a database of 3D models [Massa et al. CVPR 2016]. The method is based on CNNs and on an adaptation from real to rendered views. Code is available from the project page (http://imagine.enpc.fr/~suzano-f/exemplar-cnn/).

- Viewpoint Estimation. Francisco Massa, Renaud Marlet and Mathieu Aubry have developed a method to estimate the viewpoint of objects in images [Massa et al. BMVC 2016]. It relies on training jointly with the detection task. It improves mAVP (mean Average Viewpoint Precision) by a significant margin over previous state-of-the-art results on the Pascal3D+ dataset. Code is available from the project page (http://imagine.enpc.fr/~suzano-f/bmvc2016-pose/).
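To make "estimating the parameters of an affine transformation" concrete: when point correspondences between two images are already known, the six affine parameters can be recovered classically by linear least squares. Rocco et al. instead regress the transformation parameters directly from the two images with a CNN, so the NumPy sketch below is only a reference illustration of the geometric model, not their method. It assumes correspondences are given as N × 2 arrays, and the function names are hypothetical.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares 2D affine transform mapping src (N x 2) onto dst (N x 2).

    Returns a 2 x 3 matrix A such that dst ~= A @ [x, y, 1]^T for each point.
    Needs at least three non-collinear correspondences.
    """
    ones = np.ones((src.shape[0], 1))
    X = np.hstack([src, ones])                         # (N, 3) homogeneous points
    params, *_ = np.linalg.lstsq(X, dst, rcond=None)   # (3, 2)
    return params.T                                    # (2, 3)


def apply_affine(A, pts):
    """Apply a 2 x 3 affine matrix to N x 2 points."""
    return pts @ A[:, :2].T + A[:, 2]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A_true = np.array([[1.1, 0.2, 5.0],
                       [-0.1, 0.9, -3.0]])
    src = rng.uniform(0, 100, size=(20, 2))
    dst = apply_affine(A_true, src) + rng.normal(scale=0.1, size=(20, 2))
    # With mild noise, the estimate should be close to A_true.
    print(np.round(fit_affine(src, dst), 2))
```

The same idea extends to the synthetic training data mentioned above: sampling a random A_true and warping an image with it yields an image pair whose ground-truth parameters are known without any manual annotation.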

High-level 3D Reconstruction

- Shape Merging. Alexandre Boulch and Renaud Marlet have developed a robust, statistical method for deciding whether or not two surfaces (e.g., meshes or primitives detected in a point cloud) should be considered the same, and thus can be merged [Boulch & Marlet ICPR 2014]. It can be used to abstract geometric shapes such as planes or spheres, which are prevalent in an urban environment, from a 3D point cloud or mesh. Code for shape merging is available from a git repository (https://github.com/aboulch/primitive_merging).
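As a rough illustration of the kind of decision involved in shape merging, the sketch below uses a much simpler, hypothetical criterion than the statistical test of Boulch & Marlet. Two point sets sampled from planar patches are merged if a single plane, fitted to their union by SVD, explains the points almost as well as the two separate fits do. The function names, the `tolerance` ratio and the `noise_floor` (which keeps the test meaningful for noise-free data) are assumptions for illustration only.

```python
import numpy as np


def plane_rms(points):
    """RMS distance of N x 3 points to their least-squares plane (via SVD)."""
    centered = points - points.mean(axis=0)
    # The right singular vector with the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    normal = vt[-1]
    return float(np.sqrt(np.mean((centered @ normal) ** 2)))


def should_merge(points_a, points_b, tolerance=1.5, noise_floor=1e-3):
    """Hypothetical rule: merge if the joint plane fit is not much worse."""
    separate = max(plane_rms(points_a), plane_rms(points_b), noise_floor)
    joint = plane_rms(np.vstack([points_a, points_b]))
    return joint <= tolerance * separate


if __name__ == "__main__":
    rng = np.random.default_rng(0)

    def patch(x_offset, z_level, n=200):
        # Noisy horizontal square patch, 10 x 10 units, at height z_level.
        xy = rng.uniform(0, 10, size=(n, 2)) + [x_offset, 0.0]
        z = z_level + rng.normal(scale=0.01, size=(n, 1))
        return np.hstack([xy, z])

    a = patch(0.0, 0.0)
    print(should_merge(a, patch(10.0, 0.0)))  # coplanar neighbour: True
    print(should_merge(a, patch(0.0, 0.5)))   # parallel, offset plane: False
```

A fixed ratio like this is sensitive to the chosen thresholds and to outliers, which is precisely what a robust statistical criterion is meant to avoid.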