The Semapolis project has produced scientific results at three levels: methodologies (new learning methods), computer vision and computer graphics tasks (recognition, detection, segmentation, reconstruction, rendering), and applications (specialization and demonstration on semantic and 3D city modeling). Many of the publications come with code and data, with supporting experiments involving urban data.

Learning with Weak, Little or No Supervision

Over the last few years, Convolutional Neural Networks (CNNs) have transformed the field of computer vision thanks to their unparalleled capacity to learn high-level semantic image features. However, to successfully learn those features, they usually require massive amounts of manually labeled data, which is both expensive and impractical to scale. Therefore, semantic feature learning with little or no supervision is of crucial importance in order to successfully harvest the vast amount of visual data that are available today.

Weakly Supervised Learning. Successful methods for visual object recognition are typically trained on datasets containing many images with rich annotations, which are both expensive to create and subjective. [Oquab et al. CVPR 2015] propose a weakly-supervised CNN for object classification that relies only on image-level labels, yet can learn from cluttered scenes containing multiple objects. It performs comparably to its fully-supervised counterpart.

Semi-Supervised Learning. [Koziński et al. NeurIPSw 2017] propose an approach based on a Generative Adversarial Network (GAN) for the semi-supervised training of structured-output neural networks. Initial experiments in image segmentation indicate that it matches the performance of a fully supervised scenario while using half as many annotations.

Low-Shot Learning. [Gidaris & Komodakis CVPR 2018] define an attention-based few-shot visual learning system that, at test time, is able to efficiently learn novel categories from only a few training examples while not forgetting the initial categories on which it was trained.

Unsupervised Feature Learning. [Gidaris & Komodakis ICLR 2018] propose to learn image features by training CNNs to recognize the 2D rotation that has been applied to their input image. They demonstrate that this apparently simple task actually provides a very powerful supervisory signal for semantic feature learning, yielding state-of-the-art performance in various unsupervised feature learning benchmarks (for recognition, detection, segmentation), only a few points lower than in the supervised case.
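The rotation-recognition pretext task can be illustrated with a minimal sketch of how self-labeled training samples might be generated. It assumes that the rotations are the four multiples of 90 degrees and that the class label is the rotation index; the function name `make_rotation_batch` is hypothetical, and the CNN that would be trained on these samples is omitted.

```python
import numpy as np

def make_rotation_batch(image):
    """Return rotated copies of `image` (H x W or H x W x C) and their labels.

    Label k means the image was rotated by k * 90 degrees counter-clockwise.
    The labels come for free, so no manual annotation is needed.
    """
    # Rotate in the image plane only (axes 0 and 1), leaving channels untouched.
    rotated = [np.rot90(image, k=k, axes=(0, 1)) for k in range(4)]
    labels = np.arange(4)
    return rotated, labels

# Tiny 2 x 3 "image" so that the effect of each rotation is easy to inspect.
image = np.arange(6).reshape(2, 3)
views, labels = make_rotation_batch(image)
for view, label in zip(views, labels):
    print(label, view.shape)
```

A classifier trained to predict `labels` from `views` has to pick up on object orientation cues, which is the intuition behind using this task as a supervisory signal.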

Unsupervised Learning & Architecture Style Discovery. [Lee et al. ICCP 2015] explore whether visual patterns can be discovered automatically in the particular domain of architecture, using huge collections of street-level imagery. They find visual patterns that correspond to semantic-level architectural elements distinctive to specific time periods. This analysis makes it possible both to date buildings and to discover how functionally-similar architectural elements (e.g., windows, doors, balconies) have changed over time due to evolving style.

Domain Adaptation for Unsupervised Learning from Synthetic Data. [Massa et al. CVPR 2016] show how to map features from real images and features from CAD-rendered views into the same feature space, thus allowing training on a large amount of synthetic data, without the need for manual annotation, i.e., without supervision.

CNN Understanding. To help understand the "black box" of a neural network, [Aubry & Russell ICCV 2015] introduce an approach for analyzing the variation of features generated by CNNs w.r.t. scene factors that occur in natural images, including object style, 3D viewpoint, color, and scene lighting configuration.

Object Detection

One of the main basic tasks in scene understanding is object detection, which requires both recognizing objects and localizing them in an image.

Accurate Localization. [Gidaris & Komodakis ICCV 2015] propose an accurate object detection system that relies on a multi-region CNN that encodes semantic segmentation-aware features capturing a diverse set of discriminative appearance factors to enhance localization sensitivity. [Gidaris & Komodakis CVPR 2016] show how to boost the localization accuracy of object detectors, with a probabilistic estimation of the boundaries of an object of interest inside a region. It can achieve high detection accuracy even when it is given as input a set of sliding windows, thus proving that it is independent of box proposal methods.

Objectness. More generally, many computer vision tasks rely on category-agnostic bounding box proposals. [Gidaris & Komodakis BMVC 2016] propose a new approach to tackle this problem that is based on an active strategy for generating box proposals.

Low-level Semantic Segmentation

Semantic segmentation is applicable both to 2D data (images) and to 3D data (depth maps, point clouds, meshes). It can be low-level, at pixel, vertex or face level, as provided for instance by random forests or CNNs, possibly with regularization based on MRFs or deep learning. Or it can be high-level, with a structured, possibly hierarchical representation, as provided by parsing with a shape grammar (its counterpart being procedural modeling).

Pixelwise Segmentation with Auto-context. [Gadde et al. PAMI 2018] train a sequence of boosted decision trees using auto-context features and stacked generalization, yielding a segmentation accuracy that is better than or comparable to all previously published methods, not only for 2D images but also for 3D point clouds and meshes. (Preliminary results on images only were reported in [Jampani et al. WACV 2015].)

Penalizing the Total Variation or the Total Boundary Size. [Landrieu & Obozinski SIIMS 2017] propose working-set/greedy algorithms to efficiently solve problems penalized respectively by the total variation on a general weighted graph and its l0 counterpart, the total level-set boundary size, when the piecewise-constant solutions have a small number of distinct level-sets, which is the case for semantic segmentation. They obtain significant speed-ups over state-of-the-art algorithms.
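The two penalties mentioned above can be made concrete with a small sketch. It assumes the usual definitions: the total variation sums w_ij * |x_i - x_j| over the edges of the weighted graph, while its l0 counterpart sums w_ij over the edges whose endpoints take different values. The function names and the edge-list representation are illustrative choices, not taken from the paper, and the sketch only evaluates the penalties; it does not implement the working-set/greedy solvers.

```python
def total_variation(values, edges):
    """Weighted-graph total variation: sum of w * |x_i - x_j| over edges (i, j, w)."""
    return sum(w * abs(values[i] - values[j]) for i, j, w in edges)

def boundary_size(values, edges):
    """l0 counterpart: total weight of edges that cross a level-set boundary."""
    return sum(w for i, j, w in edges if values[i] != values[j])

# Chain graph with 5 nodes; the edge (1, 2) has a larger weight than the others.
edges = [(0, 1, 1.0), (1, 2, 2.0), (2, 3, 1.0), (3, 4, 1.0)]

# Piecewise-constant signal with two level sets: {0, 1} and {2, 3, 4}.
x = [0.0, 0.0, 3.0, 3.0, 3.0]

tv = total_variation(x, edges)  # depends on the size of the jump
bs = boundary_size(x, edges)    # depends only on where the jump is
print(tv, bs)
```

On this example the only edge crossing the boundary is (1, 2): the total variation scales with the jump amplitude, whereas the boundary size ignores it. This is why the l0 variant favors solutions with few level sets regardless of contrast.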

Accurate Labeling. To achieve accurate pixelwise image labeling, [Gidaris & Komodakis CVPR 2017] train a deep neural network that, given as input an initial estimate of the output labels and the input image, is able to predict a new refined estimate of the labels. The method achieves state-of-the-art results on dense disparity estimation. It can also be applied to unordered semantic labels for semantic segmentation tasks.

Relaxed Calibration for Multiview Segmentation. In a multi-view video setting, object segmentation methods for dynamic scenes usually rely on geometric calibration to impose spatial shape constraints between viewpoints. [Djelouah et al. 3DV 2016] show that the calibration constraint can be relaxed while still getting competitive segmentation results. The method relies on new multi-view cotemporality constraints through motion correlation cues, in addition to common appearance features used by co-segmentation methods to identify co-instances of objects.

Structured Semantic Segmentation

Top-Down Parsing with Graph Grammars and MRFs. One of the main challenges of top-down parsing with shape grammars is the exploration of a large search space combining the structure of the object and the positions of its parts, requiring randomized or greedy algorithms that do not produce repeatable results. [Koziński & Marlet WACV 2014] propose to encode the possible object structures in a graph grammar and, for a given structure, to infer the position of parts using standard MAP-MRF techniques. This limits the application of the less reliable greedy or randomized optimization algorithms to structure inference.

Learning Shape Grammars. Parsing methods based on shape grammars suffer from the limits of handwritten rules, which are prone to errors and not scalable. [Gadde et al. IJCV 2016] propose a method to automatically learn shape grammars from segmentation samples. The learned grammars offer a faster parsing convergence while producing equal or more accurate parsing results compared to handcrafted grammars as well as to grammars learned by other methods.

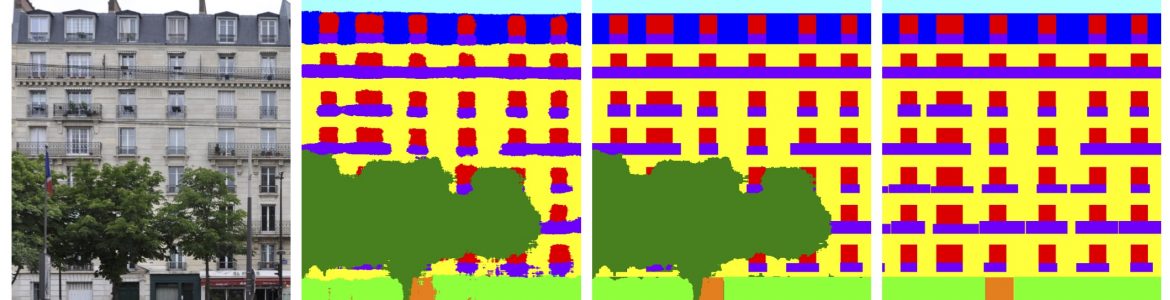

Relaxing Parsing Constraints and Dealing with Occlusions. Instead of exploring the procedural space of shapes derived from a grammar, [Koziński et al. ACCV 2014] formulate parsing as a linear binary program with user-defined shape priors. The algorithm produces plausible approximations of globally optimal segmentations without grammar sampling. Pushing the idea further, [Koziński et al. CVPR 2015] propose a new shape prior formalism for segmenting images with regularities such as facade images. It combines the simplicity of split grammars with unprecedented expressive power: the capability of encoding simultaneous alignment in two dimensions, facade occlusions and irregular boundaries between facade elements. The method is extended to simultaneously segment the visible and occluding objects, and recover a plausible structure of the occluded object.

Urban Procedural Modeling. On a number of occasions, it is desirable to design 3D models of buildings by hand, which is a notoriously difficult task for novices despite significant research effort to provide intuitive and automated systems. [Nishida et al. TOG 2016] propose an approach that associates sketch-based modeling and procedural modeling, which allows non-expert users to generate complex buildings in just a few minutes.

2D/3D Correspondence and Alignment

Finding correspondences and alignments in images (2D-2D) or between images with three-dimensional information (2D-3D) is a key component in the visual analysis of urban data, with direct applications such as place recognition and object detection. These tasks face two main challenges: (1) huge variations due to age, lighting or change of seasons, if not structure, and (2) the size of the search space due to the extent of cities and the variety of viewpoints. We have developed and improved a number of methods that address these challenges, with applications to place recognition, object detection and pose estimation.

2D-2D Correspondence and Alignment. Repetitive structures are notoriously hard to deal with, in particular for establishing correspondences in multi-view geometry or for bag-of-visual-words representations. Yet they constitute an important distinguishing feature for many places. [Torii et al. PAMI 2015] propose a specific representation of repeated structures suitable for scalable retrieval and geometric verification. [Rocco et al. CVPR 2017] address the problem of determining correspondences between two images in agreement with a geometric model such as an affine or thin-plate spline transformation, and estimating its parameters. [Dalens et al. submitted] identify visual differences between objects over time.
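To make the notion of "estimating the parameters of a geometric model" concrete, here is a minimal sketch for the affine case. It is not the learned approach of [Rocco et al. CVPR 2017]: it assumes point correspondences are already known and swaps in a classical least-squares fit. The function name `fit_affine` and the sample points are hypothetical.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares 2D affine fit such that dst ≈ src @ A.T + t.

    src, dst: N x 2 arrays of corresponding points (N >= 3, not all collinear).
    Returns the 2 x 2 matrix A and the translation vector t.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # Homogeneous coordinates turn the affine map into one linear system.
    X = np.hstack([src, np.ones((len(src), 1))])       # N x 3
    params, *_ = np.linalg.lstsq(X, dst, rcond=None)   # 3 x 2
    A = params[:2].T
    t = params[2]
    return A, t

# Synthetic correspondences generated from a known transformation.
A_true = np.array([[2.0, 0.5], [-1.0, 1.0]])
t_true = np.array([3.0, -2.0])
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
dst = src @ A_true.T + t_true

A_est, t_est = fit_affine(src, dst)
print(A_est)
print(t_est)
```

With noise-free correspondences the fit recovers the six affine parameters; with real images the difficulty lies in obtaining reliable correspondences in the first place, which is the part the cited work addresses.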

2D-3D Alignment at Scene Level. [Aubry et al. TOG 2014] represent a 3D model of a scene by a small set of discriminative visual elements that are automatically learnt from rendered views and that can reliably be matched in 2D depictions, offering robust and scalable 2D-3D alignments, even with non-photorealistic representations. [Aubry et al. chapter LSVG 2015] provide more details and experiments. [Torii et al. CVPR 2015], extended in [Torii et al. PAMI 2017], observe that alignment becomes easier when both a query image and a database image depict the scene from approximately the same viewpoint. They develop a new place recognition approach that combines an efficient synthesis of novel views with a compact indexable image representation. Also with applications to place recognition and image retrieval, [Arandjelovic et al. CVPR 2016], extended in [Arandjelovic et al. PAMI 2017], propose a CNN-based approach that can be applied on very large-scale weakly labeled datasets.

2D-3D Alignment at Object Level. [Aubry et al. CVPR 2014] pose object category detection in images as a type of part-based 2D-to-3D alignment problem, and learn correspondences between real photographs and synthesized views of 3D CAD models. [Massa et al. CVPR 2016] show how to adapt the features of natural images to better align with those of CAD-rendered views, which is critical to the success of 2D-3D exemplar detection. [Massa et al. BMVC 2016] compare various approaches for estimating object viewpoint in a unified setting, and consequently propose a new training method for joint detection and viewpoint estimation.

3D Reconstruction of Man-made Environments

Urban structures, in particular buildings, come with strong shape priors and regular patterns, which can be leveraged for analysis [Torii et al. PAMI 2015] and enforced for reconstruction. They also come with specific issues, such as textureless and specular areas, that break traditional 3D reconstruction methods.

Patch-Based Reconstruction. [Bourki et al. WACV 2017] propose an efficient patch-based method for the Multi-View Stereo (MVS) reconstruction of highly regular man-made scenes from calibrated, wide-baseline views and a sparse Structure-from-Motion (SfM) point cloud. The method is robust to textureless and specular areas.

Piecewise-Planar Reconstruction. [Boulch et al. CGF 2014] propose an effective method to impose a meaningful prior on piecewise-planar, watertight surface reconstruction, based on the regularization of the reconstructed surface w.r.t. the length of edges and the number of corners. This method is also particularly good at surface completion for unseen areas.

Shape Merging. Surface reconstruction from point clouds often relies on a primitive extraction step, which may be followed by a merging step because of possible over-segmentation. [Boulch & Marlet ICPR 2014] propose statistical criteria, based on a single intuitive parameter, to decide whether or not two given surfaces (not just primitives) are to be considered the same, and thus can be merged.

Reconstruction from Heterogeneous Data. New algorithms were developed to combine the three main sources of urban data (lidar point clouds, mixed with aerial and ground shots) and to manage the differences in resolution between pictures (aerial and ground), improving the accuracy and overall quality of the generated 3D models [Keriven techreport 2019].

Shape Simplification. A hybrid geometry generation algorithm has also been designed. It preserves the details of the geometry while simplifying flat surfaces. This geometric simplification also improves the ability to assign correct semantics to the model [Keriven techreport 2019].

Image-Based Rendering for Virtual Navigation

A 3D model allows virtual navigation in a building or city. However, high-quality navigation requires a level of 3D accuracy, completeness, compactness and knowledge of materials that is out of reach of current data capture and 3D reconstruction techniques. Yet, smooth, real-time virtual navigation in an urban environment is possible using Image-Based Rendering (IBR) techniques, relying only on partial information regarding 3D geometry and/or semantics.

Real-Time Quality IBR. The various IBR algorithms generate high-quality photo-realistic imagery but have different strengths and weaknesses, depending on 3D reconstruction quality and scene content. Using a Bayesian approach, [Ortiz-Cayon et al. 3DV 2015] propose a principled approach to select the algorithm with the best quality/speed trade-off in each image region. This reduces the cost of rendering significantly, allowing IBR to be run on a mobile device.

Dealing with Occlusion with Close Objects and View-Dependent Texturing. For indoor scenes, two important challenges are the compromise between compactness and fidelity of 3D information, especially regarding occlusion relationships when viewed up close, and the performance cost of using many photographs for view-dependent texturing of man-made materials. [Hedman et al. TOG 2016] propose a method based on different representations at different scales as well as tiled IBR, giving real-time performance while hardly sacrificing quality.

Dealing with Thin Structures. Another challenge concerns thin structures such as fences, which generate occlusion artifacts. Based on simple geometric information provided by the user, [Thonat et al. CGF 2018] propose a multi-view segmentation algorithm for thin structures that extracts multi-view mattes together with clean background images and geometry. These are used by a multi-layer rendering algorithm that allows free-viewpoint navigation, with significantly improved quality compared to previous solutions.

Dealing with Reflective Surfaces. IBR allows good-quality free-viewpoint navigation in urban scenes, but suffers from artifacts on poorly reconstructed objects, e.g., reflective surfaces such as cars. To alleviate this problem, [Ortiz-Cayon et al. 3DV 2016] propose a method that automatically identifies stock 3D models (using a previous result from the project [Gidaris & Komodakis ICCV 2015]), aligns them in the 3D scene (leveraging another result from the project [Massa et al. BMVC 2016]) and performs morphing to better capture image contours.

Perspective and Multi-View Inpainting for Scene Editing. [Thonat et al. 3DV 2016] propose an inpainting-based method to remove objects such as people, cars and motorbikes from urban images (as provided by [Gidaris & Komodakis ICCV 2015]), with multi-view consistency. It enables a form of scene editing, with free-viewpoint IBR in the edited scenes as well as IBR scene editing, such as limited displacement of real objects. Building on this idea, [Philip & Drettakis I3D 2018] provide correct perspective and multi-view coherence of inpainting results, with a method that scales to large scenes. It is based on a local planar decomposition, which improves both coherence and quality.