Semapolis Project: Semantic visual analysis and 3D reconstruction of urban environments

The goal of the Semapolis project (1 October 2013 to 30 September 2017) is to develop advanced techniques for large-scale image analysis and learning, in order to semantize urban photos and build semantized 3D models of urban environments with improved visual rendering. The Semapolis project is co-funded by the Agence Nationale de la Recherche (ANR) under the CONTINT 2013 programme, reference ANR-13-CORD-0003.

Geometric 3D city models have a wide range of applications, such as navigation or realistic virtual sets for video games and films. Companies such as Google, Microsoft and Apple have started to produce this kind of data. However, it consists only of simple surfaces textured from images. This limits its use in urban studies and the construction industry, and rules out applications such as diagnosis or simulation. In addition, geometry and texture are often wrong where parts are hidden or discontinuous, as happens with occlusions by a tree, a car or a lamp post, objects that are ubiquitous in urban scenes.

Initial objectives (2013)

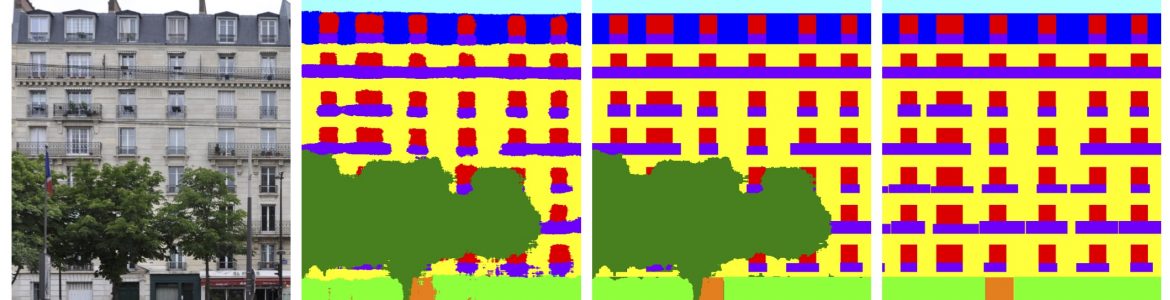

We want to go further by producing semantized 3D models, which identify architectural elements such as windows, walls, roofs, doors, etc. Semantic priors on the analyzed images will also let us reconstruct plausible geometry and renderings for the hidden parts. Semantics is useful in a larger number of scenarios at the scale of a building or a city: diagnosis and simulation in renovation projects, precise impact studies of shadows cast on windows, deployment of solar panels, etc. Navigation in virtual cities can also be improved, with visual renderings specific to the identified objects. Models can also be compacted by encoding repetition (e.g. windows) and by replacing the original textures with textures derived from the semantics; this allows cheaper and faster transmission over the low bandwidth of mobile networks, and efficient storage on embedded GPS devices.

The main objective of the project is to make major advances in the following areas:

- Learning for object recognition: We will develop innovative large-scale learning algorithms to recognize various architectural styles and elements in images. These methods will be able to exploit very large amounts of data while requiring only few manual annotations (weakly supervised learning).

- Learning shape grammars: We will develop techniques to learn stochastic shape grammars from examples. The learned grammars will make it possible to adapt to the wide variety of building types without always relying on experts. Thanks to the learned parameters, parsing will also be faster, more accurate and more robust.

- Grammar-based parsing: We will develop new energy minimization methods based on visual cues to keep the exponential number of candidate parses under control. The previously learned statistical visual properties will be aggregated to score parses accurately.

- Semantized 3D reconstruction: We will develop original, robust techniques to synchronize multi-view 3D reconstruction with semantic analysis, thereby guaranteeing the alignment of roofs or windows at the corners of facades.

- Visual rendering and semantics: We will develop image-based rendering methods that rely on the semantics of the scene to improve graphical quality: depth estimation, adaptive blending, hole filling and region completion.

To validate our research, we will conduct experiments on data from large cities, in particular Paris (large number of panoramas, denser images georeferenced at a finer scale, cadastral map, construction dates).

Revisited objectives (2017)

Semapolis was conceived in 2012-2013 on the basis of methods that were either well established at the time but still offered interesting room for improvement (e.g. graphical models), or still relatively new and promising (e.g. image-based rendering, parsing with shape grammars via reinforcement learning techniques).

However, the rise and successes of deep learning that followed the initial design of the project led us to significantly redraw the map of relevant methodological tools, without altering the applicative purpose of the project. Semapolis researchers therefore quickly began to also explore general deep learning techniques and their uses for semantized urban analysis and reconstruction. As for visual navigation in virtual 3D environments, the project remained focused on image-based rendering (IBR), in particular on methods that exploit inferred semantic information.

Major results were obtained in these areas, with about 40 international peer-reviewed publications, most of them in rank A venues and half of them released with code and data.