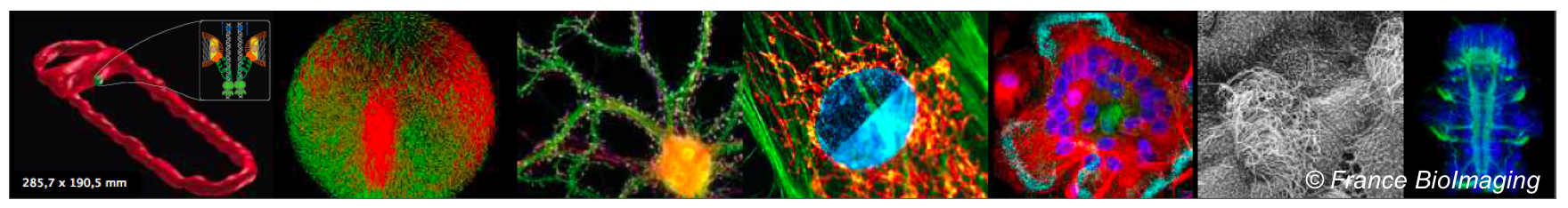

Microscopy images are routinely explored on 2D screens, which limits the human capacity to grasp complex volumetric biological dynamics. With the massive production of multidimensional images (3D + time, multi-channel) and derived images (e.g., restored images, segmentation maps, and object tracks), scientists need efficient visualization and navigation methods to better handle the information contained in videos. The objective of this project was therefore to develop cutting-edge visualization and navigation methods for exploring time series of multi-valued volumetric images, with a strong focus on live-cell imaging applications. NAVISCOPE, building on the strengths of scientific visualization and artificial intelligence methods, aimed to provide systems capable of assisting scientists in better understanding massive amounts of information. Next-generation visualization prototypes, potentially based on immersive and holographic devices, will be extremely helpful in the future for detecting, displaying, and annotating space-time events in large volumes, while guiding the observer's attention and enabling fluid interaction with the image contents.

The teams involved in the consortium addressed the following three challenges:

- Development of novel visualization methods capable of displaying heterogeneous dynamics with appropriate spatiotemporal representations and abstractions.

- Design of machine learning strategies to localize regions of interest, correct derived data (segmentation maps), and enrich annotations in datasets.

- Design of effective navigation and interaction methods for the exploration and analysis of sparse sets of localized intracellular events (membrane and cytoskeleton dynamics, organelle interactions, etc.) and general biological processes (cell migration, cell-cell interactions, development of multicellular organisms, etc.).