Last updates: MIG 2023 AWARDS RESULTS

BEST POSTER AWARD:

Enabling Physical VR Interaction with Deep RL Agents.

Paul Boursin, David Hamelin, James Burness and Marie-Paule Cani

BEST PRESENTATION AWARD:

Physical Simulation of Balance Recovery after a Push.

Alexis Jensen, Thomas Chatagnon, Niloofar Khoshsiyar, Daniele Reda, Michiel van de Panne, Charles Pontonnier and Julien Pettré

BEST PAPER AWARD (non-student category):

Real-time Computational Cinematographic Editing for Broadcasting of Volumetric-captured events: an Application to Ultimate Fighting.

Francois Bourel, Xi Wang, Ervin Teng, Valerio Ortenzi, Adam Myhill and Marc Christie

BEST PAPER AWARD (student category):

Primal Extended Position Based Dynamics for Hyperelasticity.

Yizhou Chen, Yushan Han, Jingyu Chen, Shiqian Ma, Ronald Fedkiw and Joseph Teran

ACM MIG 2023 is sponsored by

Click here to access the replay playlist of ACM MIG 2023!

REGISTRATION (link)

| Registration type | Early Bird (until Oct. 31st, 2023) | Late Registration (from Nov. 1st, 2023) |
| --- | --- | --- |
| On-site attendance | | |
| Non ACM member | 580€ | 680€ |
| ACM member | 500€ | 600€ |
| Student | 350€ | 450€ |
| Virtual attendance | | |
| Non ACM author | 183€ | 267€ |
| ACM author | 117€ | 200€ |
| Virtual attendee | free | free |

Please follow this link to get registered: https://cvent.me/NlEeen

For virtual presentation of papers and posters, please pick one of the paid virtual attendance registrations. Virtual attendance without a presentation is free; virtual meeting links will be posted here.

Registrations include:

- Tuesday welcome party in the Rennes downtown area (more info soon)

- Wednesday, Thursday and Friday lunches

- Wednesday poster session food and beverage

- Thursday social event

A visit to Mont Saint-Michel is planned for Saturday Nov. 18, but it is not included in the registration fees. We plan to help you organize the visit, which will be at your own cost. Mont Saint-Michel is a 1-hour drive from Rennes. Our goal is to form a group for the visit and to see whether there are enough of us to organize transportation, food and a guided tour. We will propose a solution in any case, and will let you know the cost before you confirm your participation. To give you an idea of the cost, Mont Saint-Michel can be reached by public transport (bus from Rennes) for 30 euros (round trip), and the visit to the abbey is 11 euros. If we organize a guided tour and private transportation, the cost will of course be higher. If you are interested, please fill in this form: https://forms.gle/V6uNMpRhVAbCDgZh8

Motion plays a crucial role in interactive applications, such as VR, AR, and video games. Characters move around, objects are manipulated or move due to physical constraints, entities are animated, and the camera moves through the scene. Motion is currently studied in many different research areas, including graphics and animation, game technology, robotics, simulation, computer vision, and also physics, psychology, and urban studies. Cross-fertilization between these communities can considerably advance the state-of-the-art in the area.

The goal of the Motion, Interaction and Games conference is to bring together researchers from this variety of fields to present their most recent results, to initiate collaborations, and to contribute to the establishment of the research area. The conference will consist of regular paper sessions, poster presentations, and presentations by a selection of internationally renowned speakers in all areas related to interactive systems and simulation. The conference includes entertaining cultural and social events that foster casual and friendly interactions among the participants.

This year again, MIG will be held in a hybrid format. We strongly hope to welcome you in person here in Rennes, so that you can make the most of the conference program, face-to-face interactions with the community, the city of Rennes and the beautiful Brittany region! Nevertheless, you may choose to attend either in person or virtually; the virtual option is offered so that as many people as possible can attend.

3RD CALL FOR PAPERS

The 16th annual ACM/SIGGRAPH conference on Motion, Interaction and Games (MIG 2023, formerly Motion in Games), an ACM SIGGRAPH Specialized Conference held in cooperation with Eurographics, will take place in Rennes, France, 15th – 17th Nov 2023.

The goal of the Motion, Interaction, and Games conference is to be a platform for bringing together researchers from interactive systems and animation, and to have them present their most recent results, initiate collaborations, and contribute to the advancement of the research area. The conference will consist of regular paper sessions for long and short papers, and talks by a selection of internationally renowned speakers from academia as well as from industry.

The conference organizers invite researchers to consider submitting their highest quality research for publication in MIG 2023.

Important dates

- Abstract submission: No abstract submission required*

- Long and Short Paper Submission Deadline: 14th July 2023 (extended from 7th July 2023)

- Long and Short Paper Acceptance Notification: 7th September 2023, 23:59 AoE (previously 1st September 2023, then 5th September 2023)

- Long and Short Paper Camera Ready Deadline: 22nd September 2023

*New papers may be submitted even if no abstract was previously submitted. We already received a significant number of abstracts to facilitate the reviewing process, so no further abstract submissions are required. Thanks to those authors who submitted abstracts.

Poster

- Poster Submission Deadline: 22nd September 2023 (extended from 12th September 2023)

- Poster Notification: 29th September 2023 (previously 22nd September 2023)

- Final Version of Accepted Posters: TBD (previously 29th September 2023)

Note: all submission deadlines are 23:59 AoE timezone (Anywhere on Earth).

Topics of Interest

Relevant topics include (but are not limited to):

- Animation Systems

- Animal locomotion

- Autonomous actors

- Behavioral animation, crowds & artificial life

- Clothes, skin and hair

- Deformable models

- Expressive animation

- Facial animation

- Facial feature analysis

- Game interaction and player experience

- Game technology

- Gesture recognition

- Group and crowd behaviour

- Human motion analysis

- Image-based animation

- Interaction in virtual and augmented reality

- Interactive animation systems

- Interactive storytelling in games

- Machine learning techniques for animation

- Motion capture & retargeting

- Motion control

- Motion in performing arts

- Motion in sports

- Motion rehabilitation systems

- Multimodal interaction: haptics, sound, etc

- Navigation & path planning

- Physics-based animation

- Real-time fluids

- Robotics

- User-adaptive interaction and personalization

- Virtual humans

- XR (AR, VR, MR) environments

We invite submissions of original, high-quality papers in any of the topics of interest (see above) or any related topic. Each submission should be 7-9 pages in length for a long paper, or 4-6 pages for a short paper. References are excluded from the page limit. Submissions will be reviewed by our international program committee for technical quality, novelty, significance, and clarity. We encourage authors whose content fits into 6 pages to submit a short paper, and to submit a long paper only if the content requires it.

Submission Instructions

All submissions will be double-blind peer-reviewed by our international program committee for technical quality, novelty, significance, and clarity. Double-blind means that paper submissions must be anonymous and include the unique paper ID that will be assigned upon creating a submission using the online system.

Papers should not have previously appeared in, or be currently submitted to, any other conference or journal. For each accepted contribution, at least one of the authors must register for the conference.

All submissions will be considered for the Best Paper, Best Student Paper, and Best Presentation awards, which will be conferred during the conference. Authors of selected best papers will be invited (subject to confirmation) to submit extended and significantly revised versions for a Special Issue of the Computers & Graphics journal.

We also invite submissions of poster papers in any of the topics of interest and related areas. Each submission should be 1-2 pages in length. Two types of work can be submitted directly for poster presentation:

- Work that has been published elsewhere but is of particular relevance to the MIG community can be submitted as a poster. This work and the venue in which it is published should be identified in the abstract;

- Work that is of interest to the MIG community but is not yet mature enough to appear as a paper.

Posters will not appear in the official MIG proceedings or in the ACM Digital library but will appear in an online database for distribution at author’s discretion. You can use any paper format, though the MIG paper format is recommended. In addition, you are welcome to submit supplementary material such as videos.

All submissions should be formatted using the SIGGRAPH formatting guidelines (sigconf). The LaTeX template can be found here: https://www.acm.org/publications/proceedings-template (for the review version, you can use the command \documentclass[sigconf, screen, review, anonymous]{acmart})

All papers and posters should be submitted electronically to their respective tracks on EasyChair: https://easychair.org/conferences/?conf=mig2023

Supplementary Material

Due to the nature of the conference, we strongly encourage authors to submit supplementary materials (such as videos) of up to 200 MB in size. They may be submitted electronically and will be made available to reviewers. For video, we advise QuickTime MPEG-4 or DivX Version 6, and for still images, we advise JPG or PNG. If you use another format, there is no guarantee that reviewers will be able to view it. An appendix may also be submitted as supplementary material. These materials will accompany the final paper in the ACM Digital Library.

Keynotes

Johanna Pirker

Dr. Johanna Pirker is a computer scientist focusing on game development, research, and education and an active and strong voice of the local indie dev community. She has extensive experience in designing, developing, and evaluating games and VR experiences and believes in them as tools to support learning, collaboration, and solving real problems. Johanna started in the industry as a QA tester at EA and still consults for studios in the field of games user research. In 2011/12 she started researching and developing VR experiences at the Massachusetts Institute of Technology. At the moment, she is a professor of media informatics at the Ludwig Maximilian University of Munich and Ass.Prof. for game development at TU Graz, and researches games with a focus on AI, HCI, data analysis, and VR technologies. Johanna was listed on the Forbes 30 Under 30 list of science professionals.

Jonas Beskow

Jonas Beskow is a Professor of Speech Communication, specialising in Multimodal Embodied Systems at KTH in Stockholm. He is also a co-founder and Senior R&D Engineer at Furhat Robotics. His interests encompass modelling, synthesis, and understanding human communicative signals and behaviours, including speech, facial expressions, gestures, gaze, and the dynamics of face-to-face interaction. Specifically, he is passionate about integrating all these elements into machines and embodied agents, both physical and virtual, to enable more engaging and dynamic interactions.

Sylvia Pan

Prof Sylvia Pan is a Professor of Virtual Reality at Goldsmiths, University of London. She co-leads the SeeVR research group including 10 academics and researchers. She holds a PhD in Virtual Reality, and an MSc in Computer Graphics, both from UCL, and a BEng in Computer Science from Beihang University, Beijing, China. Before joining Goldsmiths in 2015, she worked as a research fellow at the Institute of Cognitive Neuroscience, and at the Computer Science Department of UCL. Her research interest is the use of Virtual Reality as a medium for real-time social interaction, in particular in the application areas of medical training and therapy. Her work in social anxiety in VR and moral decisions in VR has been featured multiple times in the media, including BBC Horizon, the New Scientist magazine, and the Wall Street Journal. Her 2017 Coursera VR specialisation attracted over 100,000 learners globally, and she co-leads on the MA/MSc in Virtual and Augmented Reality at Goldsmiths Computing.

Steve Tonneau

How can model-based AI advance locomotion skills for legged characters?

Steve Tonneau is a lecturer at the University of Edinburgh. He defended his PhD in 2015 after 3 years in the INRIA/IRISA Mimetic research team, and pursued a post-doc in robotics at LAAS-CNRS in Toulouse, within the Gepetto team. His research focuses on motion planning based on the biomechanical analysis of motion invariants. Applications include computer graphics animation as well as robotics.

Local Industry (Wednesday November 15th, after poster session)

Alongside the poster session, companies from the Rennes area working in Computer Animation and Graphics will give presentations! We are happy to have 3 great (but short) talks from:

Stéphane Donikian, Golaem (golaem.com)

Cyril Corvazier, Mercenaries Engineering (guerillarender.com)

Quentin Avril, InterDigital (interdigital.com)

International Program Committee

Rahul Narain

Indian Institute of Technology Delhi

Panayiotis Charalambous

CYENS – Center of Excellence

Fotis Liarokapis

CYENS – Center of Excellence

Franck Multon

INRIA

Remi Ronfard

INRIA

Rinat Abdrashitov

Epic Games

Mikhail Bessmeltsev

University of Montreal

Tiberius Popa

Concordia University

Edmond S. L. Ho

University of Glasgow

Ludovic Hoyet

INRIA Rennes – Centre Bretagne Atlantique

Tianlu Mao

Institute of Computing Technology Chinese Academy of Sciences

Nuria Pelechano

Universitat Politècnica de Catalunya

Lauren Buck

Trinity College Dublin

Ylva Ferstl

Ubisoft

Yuting Ye

Reality Labs Research @ Meta

Damien Rohmer

Ecole Polytechnique

Brandon Haworth

University of Victoria

Claudia Esteves

Departamento de Matemáticas, Universidad de Guanajuato

Daniel Holden

Epic Games

He Wang

University College London

Eric Patterson

Clemson University

Ben Jones

University of Utah

Yorgos Chrysanthou

University of Cyprus

Eduard Zell

Bonn University

Marc Christie

Université de Rennes

Adam Bargteil

University of Maryland, Baltimore County

Steve Tonneau

University of Edinburgh

Ronan Boulic

Ecole Polytechnique Fédérale de Lausanne

Pei Xu

Clemson University

John Dingliana

Trinity College Dublin

Christos Mousas

Purdue University

Aline Normoyle

Bryn Mawr College

James Gain

University of Cape Town

Carol O’Sullivan

Trinity College Dublin

Matthias Teschner

University of Freiburg

Hang Ma

Simon Fraser University

Soraia Musse

PUCRS

Sylvie Gibet

Southern Brittany University

Xiaogang Jin

Zhejiang University

Catherine Pelachaud

CNRS – ISIR, Sorbonne

Cathy Ennis

TU Dublin

Zerrin Yumak

Utrecht University

Funda Durupinar Babur

University of Massachusetts Boston

Katja Zibrek

INRIA

Stephen Guy

University of Minnesota

Program

Full papers: 30 mins (20 mins presentation + 10 mins questions)

Short papers: 15 mins (10 mins presentation + 5 mins questions)

Please find a PowerPoint template for your slides here (note that using the template is optional):

Tuesday, November 14th

From 6:00PM

Welcome Reception, Delirium café, Rennes downtown area (see venue section for details)

Wednesday, November 15th

8:45AM – 9:15AM

Conference center is open to access

9:15AM – 10:00AM

Opening remarks

10:00AM – 11:00AM

Keynote 1 – Sylvia Pan

11:15AM – 1:00PM

Session: ML for Motion

Learning Robust and Scalable Motion Matching with Lipschitz Continuity and Sparse Mixture of Experts.

Tobias Kleanthous and Antonio Martini

Objective Evaluation Metric for Motion Generative Models: Validating Fréchet Motion Distance on Foot Skating and Over-smoothing Artifacts.

Antoine Maiorca, Hugo Bohy, Youngwoo Yoon and Thierry Dutoit

Motion-DVAE: Unsupervised learning for fast human motion denoising.

Guénolé Fiche, Simon Leglaive, Xavier Alameda-Pineda and Renaud Séguier

Reward Function Design for Crowd Simulation via Reinforcement Learning.

Ariel Kwiatkowski, Vicky Kalogeiton, Julien Pettre and Marie-Paule Cani

MeshGraphNetRP: Improving Generalization of GNN-based Cloth Simulation.

Emmanuel Ian Libao, Myeongjin Lee, Sumin Kim and Sung-Hee Lee

1:00PM – 2:30PM

Lunch Break

2:30PM – 3:30PM

Keynote 2 – Steve Tonneau – How can model-based AI advance locomotion skills for legged characters?

3:45PM – 5:45PM

Session: Games

Real-time Computational Cinematographic Editing for Broadcasting of Volumetric-captured events: an Application to Ultimate Fighting.

Francois Bourel, Xi Wang, Ervin Teng, Valerio Ortenzi, Adam Myhill and Marc Christie

Exploring Mid-air Gestural Interfaces for Children with ADHD.

Vera Remizova, Antti Sand, Oleg Špakov, Jani Lylykangas, Moshi Qin, Terhi Helminen, Fiia Takio, Kati Rantanen, Anneli Kylliäinen, Veikko Surakka and Yulia Gizatdinova

Player Exploration Patterns in Interactive Molecular Docking with Electrostatic Visual Cues.

Lin Liu, Torin Adamson, Lydia Tapia and Bruna Jacobson

Heat Simulation on Meshless Crafted-Made Shapes.

Auguste De Lambilly, Gabriel Benedetti, Nour Rizk, Chen Hanqi, Siyuan Huang, Junnan Qiu, David Louapre, Raphael Granier de Cassagnac and Damien Rohmer

Virtual Joystick Control Sensitivity and Usage Patterns in a Large-Scale Touchscreen-Based Mobile Game Study.

John Baxter, Torin Adamson, Yazied Hasan, Mohammad Yousefi, Lidia Obregon, Evan Carter and Lydia Tapia

6:15PM – 6:30PM

Posters Fast Forward

6:30PM – 7:30PM

Local Industry Keynote

7:30PM – …

Poster Session with Galettes Party, Inria Convention Center

Click here for the poster program

Thursday, November 16th

09:15AM – 11:30AM

Session: ML for faces

SoftDECA: Computationally Efficient Physics-Based Facial Animations.

Nicolas Wagner, Ulrich Schwanecke and Mario Botsch

Learned Real-time Facial Animation from Audiovisual Inputs for Low-end Devices.

Iñaki Navarro, Dario Kneubuehler, Tijmen Verhulsdonck, Eloi du Bois, William Welch, Charles Shang, Ian Sachs, Victor Zordan, Morgan McGuire and Kiran Bhat

FaceDiffuser: Speech-Driven Facial Animation Synthesis Using Diffusion.

Stefan Stan, Kazi Injamamul Haque and Zerrin Yumak

MUNCH: Modelling Unique ’N Controllable Heads.

Debayan Deb, Suvidha Tripathi and Pranit Puri

Generating Emotionally Expressive Look-At Animation. (moved to Friday)

Ylva Ferstl

Physical Simulation of Balance Recovery after a Push.

Alexis Jensen, Thomas Chatagnon, Niloofar Khoshsiyar, Daniele Reda, Michiel van de Panne, Charles Pontonnier and Julien Pettré

(moved from Friday to Thursday)

12:00PM – 1:00PM

Keynote 3 – Johanna Pirker

2:30PM – 4:00PM

Session: Virtual Reality

Avatar Tracking Control with Featherstone’s Algorithm and Newton-Euler Formulation for Inverse Dynamics.

Ken Sugimori, Hironori Mitake, Hirohito Sato and Shoichi Hasegawa

Real-Time Conversational Gaze Synthesis for Avatars.

Ryan Canales, Eakta Jain and Sophie Joerg

Effect of Avatar Clothing and User Personality on Group Dynamics in Virtual Reality.

Yuan He, Lauren Buck, Brendan Rooney and Rachel McDonnell

Designing Hand-held Controller-based Handshake Interaction in Social VR and Metaverse.

Filippo Gabriele Pratticò, Irene Checo, Alessandro Visconti, Adalberto Simeone and Fabrizio Lamberti

Runtime Motion Adaptation for Precise Character Locomotion.

Noureddine Gueddach, Steven Poulakos and Robert Sumner

5:00PM

City Tour, meeting point in front of the tourism office (Click here for the meetup position)

From 7:30PM

Dinner, La Fabrique Saint-Georges, downtown area

Friday, November 17th

09:15AM – 11:00AM

Session: Animation

Primal Extended Position Based Dynamics for Hyperelasticity.

Yizhou Chen, Yushan Han, Jingyu Chen, Shiqian Ma, Ronald Fedkiw and Joseph Teran

Physical Simulation of Balance Recovery after a Push. (moved to Thursday)

Alexis Jensen, Thomas Chatagnon, Niloofar Khoshsiyar, Daniele Reda, Michiel van de Panne, Charles Pontonnier and Julien Pettré

SwimXYZ: A large-scale dataset of synthetic swimming motions and videos.

Guénolé Fiche, Vincent Sevestre, Camila Gonzalez-Barral, Simon Leglaive and Renaud Séguier

Video-Based Motion Retargeting Framework between Characters with Various Skeleton Structure.

Xin Huang and Takashi Kanai

Navigating With a Defensive Agent: Role Switching for Human Automation Collaboration.

Liz DiGioia, Torin Adamson, Yazied Hasan, Lidia Obregon, Evan Carter and Lydia Tapia

Generating Emotionally Expressive Look-At Animation.

Ylva Ferstl

11:30AM – 12:30PM

Keynote 4 – Jonas Beskow

12:30PM – 1:00PM

Closing remarks

Saturday, November 18th – OPTIONAL (see Registration section) – Mont Saint-Michel tour

9:00-18:00

More details soon

Venue

Rennes City, France

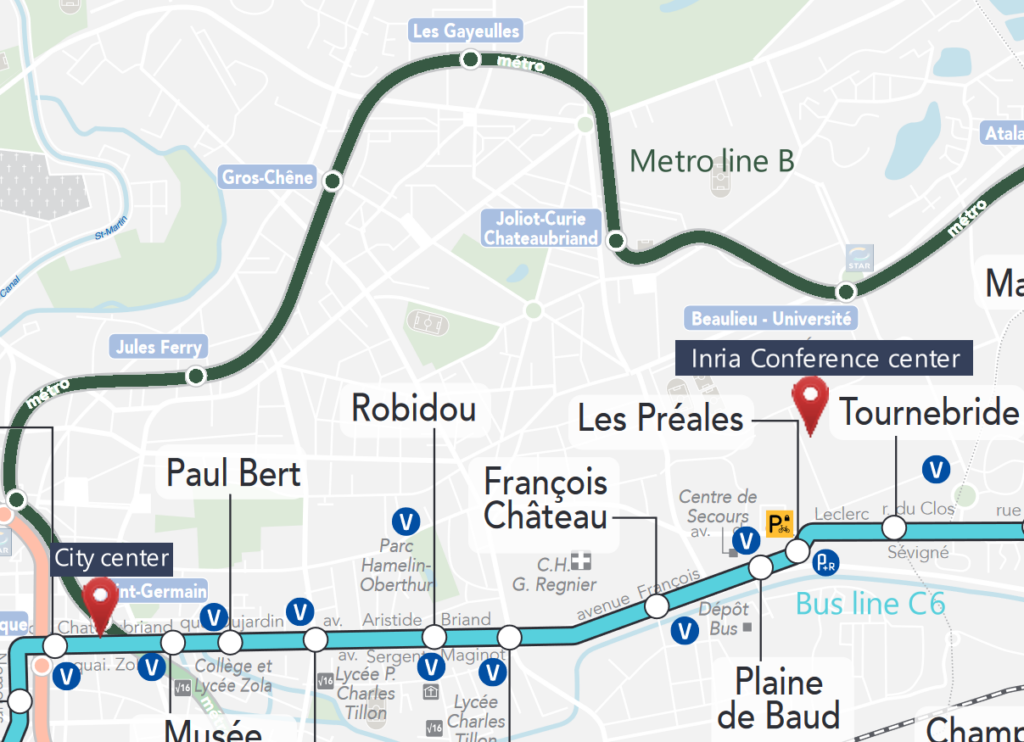

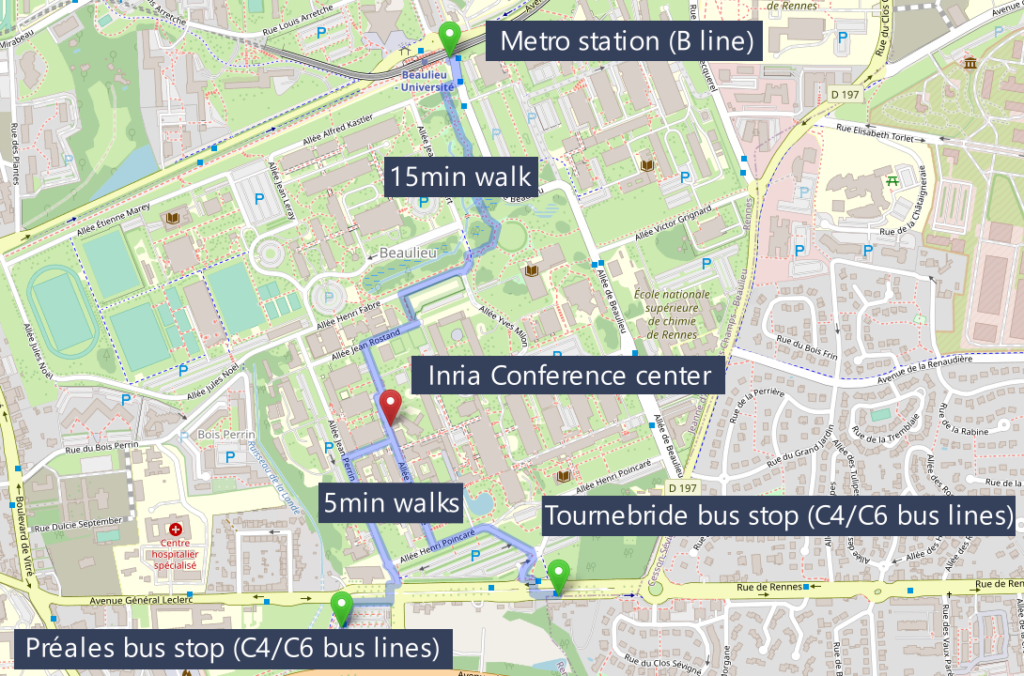

The conference will take place at the Inria Conference Center in Rennes, 263 Av. Général Leclerc, 35000 Rennes. However, we recommend that you stay in the downtown area: the Inria Conference Center is easily reached from there by public transport (20-30 minutes). Follow this link for more information about the city and its tourist attractions.

Recommended Hotels

Here is a non-exhaustive list of hotels we recommend for your stay:

- Les Chouettes Hostel

- Séjours et Affaires Appart’hôtel de Bretagne

- Hotel Mercure Place de Bretagne

- Campanile Rennes Centre Gare

- Adagio Access Rennes Centre

- Hôtel de Nemours

- Gîte du Passant Rennais

- Hôtel Anne de Bretagne

- Balthazar Hotel and Spa

- Mercure Rennes Centre Parlement

- Hôtel Atlantic

- Le Magic Hall

- Hôtel Le Saint-Antoine

- Novotel Rennes Centre Gare

- Garden Hotel

- Kyriad Rennes Centre Gare

- Mercure Rennes Centre Gare

- Ibis Styles Rennes Centre Gare Nord

Inria Rennes Convention Center

The ACM MIG Conference will take place in the Inria Convention Center, in the Beaulieu Campus Area. You can reach the campus either with metro line B (Beaulieu Université station, +15 mins walk to reach the convention center) or with city bus lines C4 or C6 (Preales or Tournebride stops, +5 mins walk).

Social Events

Welcome Reception

Welcome Reception will take place at the Delirium café, Tuesday Nov. 14th from 6pm (until as late as you like). One drink and some platters of cold cuts or cheese will be served. Delirium café is located at 15, place des Lices in the downtown area (link to Google Maps).

Poster Session and Industrial Keynotes, Wednesday Nov. 15th at 7:30PM

Poster program:

ZStudio: Portable and Real-time Motion Capture Studio for Creators in the Metaverse.

Jung-Seok Cho, Seongchan Jeong, Geonwon Lee, Jaehyun Han and HyeRin Yoo.

Improving self-supervised 3D face reconstruction with few-shot transfer learning.

Martin Dornier, Philippe-Henri Gosselin, Christian Raymond, Yann Ricquebourg and Bertrand Coüasnon.

Disentangling Embedding Vectors for Controllable Facial Video Generation.

Matt Partridge.

A summary of VR quadruped embodiment using NeuroDog.

Donal Egan, Darren Cosker and Rachel McDonnell.

ShapeVerse: Physics-based Characters with Varied Body Shapes.

Bharat Vyas and Carol O’Sullivan.

How Much Do We Pay Attention? A Comparative Study of User Gaze and Synthetic Vision during Navigation.

Julia Melgare, Guido Mainardi, Eduardo Alvarado, Damien Rohmer, Marie-Paule Cani and Soraia Musse.

A Comparative Evaluation of Formed Team Perception when in Human-Human and Human-Autonomous Teams.

Chandni Murmu, Gnaanavarun Parthiban, Konnor McDowell and Nathan McNeese.

Exploring the Influence of Super-Functional Virtual Hands on Embodiment and Perception in Virtual Reality with Children.

Yuke Pi, Leif Johannsen, Simon Thurlbeck, Dorothy Cowie, Marco Gillies and Xueni Pan.

Improving motion matching for VR avatars by fusing inside-out tracking with outside-in 3D pose estimation.

George Fletcher, Donal Egan, Rachel McDonnell and Darren Cosker.

Enabling Physical VR Interaction with Deep RL Agents.

Paul Boursin, David Hamelin, James Burness and Marie-Paule Cani.

Sparse Motion Semantics for Contact-Aware Retargeting.

Théo Cheynel, Thomas Rossi, Baptiste Bellot-Gurlet, Damien Rohmer and Marie-Paule Cani.

Detailed Eye Region Capture and Animation.

Glenn Kerbiriou, Quentin Avril and Maud Marchal.

ArtWalks via Latent Diffusion Models.

Alberto Pennino, Majed El Helou, Daniel Vera Nieto and Fabio Zund.

A Perceptual Sensing System for Interactive Virtual Agents: towards Human-like Expressiveness and Reactiveness.

Alberto Jovane and Pierre Raimbaud.

Persuasive polite robots in free-standing conversational groups

Christopher Peters

Real-time self-contact sensitive finger and full-body animation of avatars with different morphologies and proportions

Mathias Delahaye, Bruno Herbelin and Ronan Boulic

City Tour, Thursday Nov. 16th, 5:00PM

Meet in front of the tourism office (Click here for position) at 5:00PM for a tour of Rennes before dinner!

Dinner in the downtown area, Thursday Nov. 16th, 7:30PM

Meet at ‘La Fabrique Saint-Georges’ (Click here for position) at 7:30PM for a convivial dinner in the downtown area of Rennes!

Conference Organization

Conference Chairs

- Julien Pettré, Inria, France

- Barbara Solenthaler, ETH Zurich, Switzerland

Program Chairs

- Rachel McDonnell, TCD, Ireland

- Christopher Peters, KTH, Sweden

Poster Chair

- Jovane Alberto, Trinity College Dublin

Main Contact

All questions about submissions should be emailed to Rachel McDonnell (ramcdonn (at) tcd.ie) and Christopher Peters (chpeters (at) kth.se).

Julien Pettré, Inria, France (julien.pettre (at) inria.fr)

All questions about posters should be emailed to Jovane Alberto, Trinity College Dublin (JOVANEA (at) tcd.ie)