Keynote #1

When Virtual Reality Editing Meets Network Streaming

Lucile Sassatelli

Abstract: The question of designing editing cuts to drive the user’s attention in cinematic Virtual Reality (VR) has been under active investigation in the last few years. While driving the user’s attention is critical for a director to ensure the story plot is understood, attention-driving techniques are also under scrutiny in a different domain: the multimedia networking community. The development of VR content is indeed persistently hindered by the difficulty of accessing it through regular streaming over the Internet.

In this talk, I will show how driving human attention can inform the design of streaming algorithms for VR. I will specifically present two such approaches, combining networking, human-machine interaction and editing. I will finally show how such interdisciplinary approaches open new research directions for designing network- and user-adaptive streaming algorithms for immersive content.

Lucile Sassatelli has been an associate professor with Université Côte d’Azur since 2009. She obtained a PhD from Université de Cergy-Pontoise, France, spent a one-year postdoc at MIT, Cambridge, USA, and obtained her professorial habilitation in 2019. She is with the I3S laboratory, where she focuses on the problems of multimedia transmission and specifically on virtual and augmented reality (VR and AR). In 2019, Lucile Sassatelli obtained a junior chair with Institut Universitaire de France (IUF) to conduct her 5-year research project aimed at providing wide access to immersive content, even under insufficient network conditions.

Keynote #2

Reinventing movies: How do we tell stories in VR?

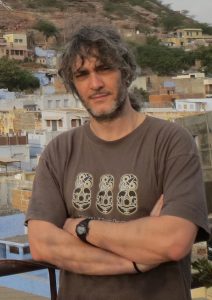

Diego Gutierrez

Abstract: Traditional cinematography has relied for over a century on a well-established set of editing rules, called continuity editing, to create a sense of situational continuity. Despite massive changes in visual content across cuts, viewers in general experience no trouble perceiving the discontinuous flow of information as a coherent set of events. However, Virtual Reality (VR) movies are intrinsically different from traditional movies in that the viewer controls the camera orientation at all times. As a consequence, common editing techniques that rely on camera orientations, zooms, etc., cannot be used. In this talk we will investigate key questions to understand how well traditional movie editing carries over to VR, such as: Does the perception of continuity hold across edit boundaries? Under which conditions? Does viewers’ observational behavior change after the cuts? We will make connections with recent cognition studies and the event segmentation theory, which states that our brains segment continuous actions into a series of discrete, meaningful events. This theory may in principle explain why traditional movie editing has been working so wonderfully, and thus may hold the answers to redesigning movie cuts in VR as well. In addition, and related to the general question of how people explore immersive virtual environments, we will present the main insights of a second, recent study, analyzing almost 2000 head and gaze trajectories recorded while users explore stereoscopic omni-directional panoramas. We have made our database publicly available for other researchers.

Diego Gutierrez is a professor at the Universidad de Zaragoza in Spain, where he leads the Graphics and Imaging Lab. His areas of interest include physically based global illumination, computational imaging, and virtual reality. He has published over 100 papers in top journals (including Nature), and is a co-founder of the start-up DIVE Medical, for early diagnosis of visual impairments in children. In 2016 he received an ERC Consolidator Grant. He is interested in studying and analyzing how traditional cinematographic techniques apply in VR, and how VR can be applied to learn about cognitive processes.
